Welcome to my blog. This post is about the basics of blockchain and how it actually works. I have come across lots of blogs (outside SAP) where people discuss blockchain; the funniest one was ‘How I explained blockchain to my grandma’. But I always wondered what it means for a developer like me: how can we implement a blockchain from scratch? No more APIs, let's build everything ourselves. Before jumping into the code, I would like to explain some core technical concepts that are very important.

A blockchain is a distributed database that maintains a continuously growing, ordered list of records (blocks) that anyone can read. Nothing special so far, but it has one interesting property: a block has to satisfy certain rules to be added, and once added it is immutable. After a block has been added to the chain, it cannot be changed without invalidating the rest of the chain.

This is one of the reasons why cryptocurrencies such as Bitcoin, Ethereum and Litecoin are built on a blockchain: nobody should be able to alter a transaction once it has been made.

As a blockchain developer, your first and most important task is to decide on the structure of a block. In our example we keep it very simple and include only the essential components: index, timestamp, data, hash and previous hash. They are mostly self-explanatory: the index is the position of the block in the chain, the timestamp is the time at which the block was committed to the blockchain, the hash is the SHA-256 hash of the block, and the previous hash is the SHA-256 hash of the previous block.

Each block must contain the hash of the previous block; this is what links the blocks into a valid chain and preserves its integrity.
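To make the block structure concrete, here is a minimal sketch in plain Node.js rather than XSJS. It assumes Node's built-in crypto module in place of a hand-written SHA-256, and it hashes the fields in the same order the service later in this post uses (index, data, timestamp, previous hash). The names createBlock and calculateBlockHash are illustrative, not part of the service.

```javascript
// Sketch only: Node.js, not XSJS. Node's crypto module replaces CalculateHash.
const crypto = require("crypto");

// SHA-256 over index + data + timestamp + previous hash, as uppercase hex
// (the XSJS service in this post also stores its hashes in uppercase).
function calculateBlockHash(index, data, timestamp, previousHash) {
  return crypto
    .createHash("sha256")
    .update(String(index) + data + timestamp + previousHash, "utf8")
    .digest("hex")
    .toUpperCase();
}

// A block is just these five fields; the hash is derived from the other four.
function createBlock(index, data, timestamp, previousHash) {
  return {
    index,
    data,
    timestamp,
    previousHash,
    hash: calculateBlockHash(index, data, timestamp, previousHash),
  };
}

const first = createBlock(1, "first block", "2018-01-01T00:00:00", "0000000X0");
const second = createBlock(2, "nitin->jim", "2018-01-01T00:05:00", first.hash);

// The link: block 2 stores block 1's hash as its previous hash.
console.log(second.previousHash === first.hash); // true
```

Because the previous hash is one of the hashed fields, changing anything in the first block changes its hash, and the second block's stored previousHash no longer matches.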

Genesis Block

The genesis block is simply the first block in the blockchain. It is essentially a dummy block that starts the chain; in Bitcoin, the genesis block has height (index) 0 and its previous-block hash is all zeros. Developers usually hard-code it.
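A hard-coded genesis block can be sketched like this in Node.js. It reuses the dummy previous hash 0000000X0 that the XSJS service later in this post uses; the index 1 and the fixed timestamp are illustrative assumptions. Fixing every value means every node derives exactly the same genesis hash.

```javascript
// Sketch only: Node.js, with Node's crypto module standing in for CalculateHash.
const crypto = require("crypto");

function sha256Hex(text) {
  return crypto.createHash("sha256").update(text, "utf8").digest("hex").toUpperCase();
}

// Hard-coded first block. Every value is fixed, so every node that runs this
// derives exactly the same genesis hash.
function createGenesisBlock() {
  const index = 1;                          // illustrative choice (Bitcoin counts from 0)
  const data = "Genesis Block";
  const timestamp = "2018-01-01T00:00:00";  // hypothetical fixed timestamp
  const previousHash = "0000000X0";         // dummy value: there is no previous block
  const hash = sha256Hex(String(index) + data + timestamp + previousHash);
  return { index, data, timestamp, previousHash, hash };
}

// Every new chain starts from the same genesis block.
const chain = [createGenesisBlock()];
console.log(chain[0].hash === createGenesisBlock().hash); // true
```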

Block Hash

To keep the blockchain's integrity, every block is hashed with SHA-256. For those not familiar with it: SHA-256 is a cryptographic hash function, like MD5 and SHA-1, not an encryption function. Hashing is one-way: once you hash a piece of text, there is no practical way to get the original text back from the hash.
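The one-way, fixed-length behaviour is easy to see with Node's built-in SHA-256. This sketch is for illustration only (the service itself uses the hand-written CalculateHash); the "abc" input is the standard SHA-256 test vector, which can also be used to sanity-check a hand-written implementation. Note that MD5 and SHA-1 are no longer considered collision-resistant, which is one reason SHA-256 is the sensible choice here.

```javascript
// Sketch only: Node.js's built-in SHA-256, for comparison with CalculateHash.
const crypto = require("crypto");

function sha256Hex(text) {
  return crypto.createHash("sha256").update(text, "utf8").digest("hex");
}

// Standard SHA-256 test vector for the input "abc".
console.log(sha256Hex("abc"));
// ba7816bf8f01cfea414140de5dae2223b00361a396177a9cb410ff61f20015ad

// Changing one character yields a completely different 64-hex-digit digest;
// there is no practical way to work back from a digest to its input.
console.log(sha256Hex("abd"));
```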

Adding Block

To add a block to the blockchain, we need to know the hash of the previous block and build the rest of the required content (index, hash, data and timestamp). The block data is provided by the end user.
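The steps for adding a block can be sketched in Node.js with an in-memory array standing in for the HANA table. The addBlock helper here is hypothetical and differs from the service's AddBlock(): it derives the index and previous hash from the latest block itself, so only the data (and, for a deterministic example, the timestamp) is passed in.

```javascript
// Sketch only: Node.js; the chain is an in-memory array instead of a HANA table.
const crypto = require("crypto");

function calculateHash(index, data, timestamp, previousHash) {
  return crypto
    .createHash("sha256")
    .update(String(index) + data + timestamp + previousHash, "utf8")
    .digest("hex")
    .toUpperCase();
}

// Derive everything except the user-supplied data from the latest block.
function addBlock(chain, data, timestamp) {
  const previous = chain[chain.length - 1];
  const index = previous.index + 1;
  const hash = calculateHash(index, data, timestamp, previous.hash);
  const block = { index, data, timestamp, previousHash: previous.hash, hash };
  chain.push(block);
  return block;
}

const genesis = {
  index: 1,
  data: "Genesis Block",
  timestamp: "2018-01-01T00:00:00",
  previousHash: "0000000X0",
};
genesis.hash = calculateHash(genesis.index, genesis.data, genesis.timestamp, genesis.previousHash);

const chain = [genesis];
const added = addBlock(chain, "nitin->jim", "2018-01-01T00:05:00");
console.log(added.index, added.previousHash === genesis.hash); // 2 true
```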

“Talk is cheap. Show me the code.” -Linus Torvalds

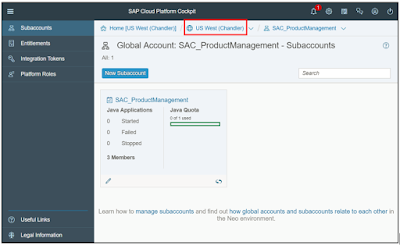

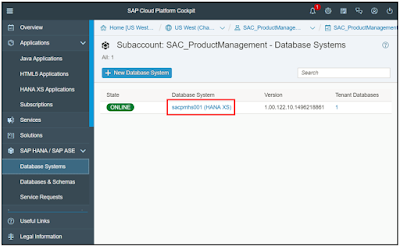

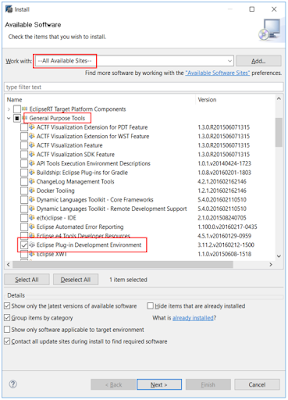

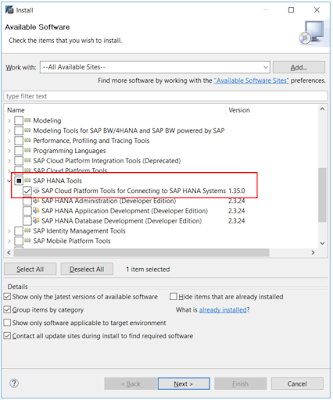

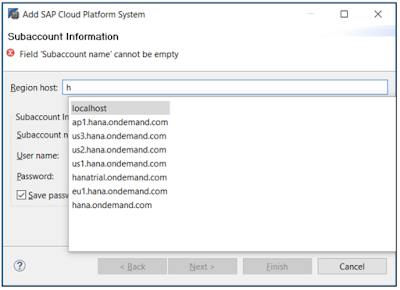

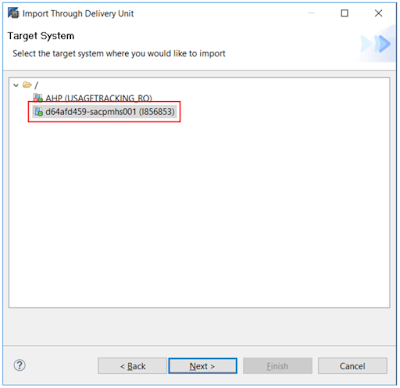

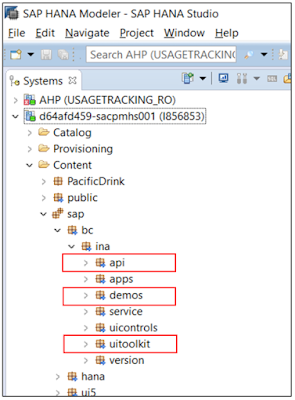

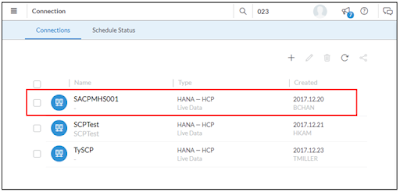

Let's look at the part that actually matters to developers. I used an SAP Cloud Platform Developer (trial) account and the MDC HANA trial to build the whole demo. If you haven't set up MDC HANA in your SAP Cloud Platform trial account yet, have a look at the MDC trial setup in SCP; the SAP tutorials provide a good guide for it.

Defining Block Structure

First, I defined a schema called "HELLOBLOCK". My design-time artifact, the schema.hdbschema file, looks like this:

schema_name = "HELLOBLOCK";

Then I created a column table called BlockChain with all the necessary fields. My entity.hdbdd looks like this:

namespace HelloBlock.DBARTIFACT;

@Schema: 'HELLOBLOCK'

context entity {

@Catalog.tableType: #COLUMN

Entity BlockChain{

key index : Integer;

data : LargeString;

commit_at : UTCTimestamp;

previous_hash : LargeString;

current_hash: LargeString;

};

};

Adding Block in Blockchain

I created a simple procedure that inserts an entry into the blockchain table. The XSJS service calls it after validating the block.

Procedure code:

PROCEDURE "HELLOBLOCK"."HelloBlock.Procedure::InsertBlock" (

IN block_index Integer,

IN block_data Nclob,

IN block_commit TIMESTAMP,

IN prevblock_hash Nclob,

IN Currblock_hash Nclob

)

LANGUAGE SQLSCRIPT

SQL SECURITY INVOKER AS

--DEFAULT SCHEMA <default_schema_name>

-- READS SQL DATA AS

BEGIN

/*************************************

Write your procedure logic

*************************************/

INSERT INTO "HELLOBLOCK"."HelloBlock.DBARTIFACT::entity.BlockChain"("index","data","commit_at","previous_hash","current_hash")

VALUES (:block_index,:block_data,:block_commit,:prevblock_hash,:Currblock_hash);

END

The HANA XSJS service that adds blocks to the blockchain:

var acmd = $.request.parameters.get("action");

var BlockData = $.request.parameters.get("data");

var BlockIndex = $.request.parameters.get("index");

var PrevBlockHash = $.request.parameters.get("prevhash");

function DisplayBlockChain() {

try {

var conn = $.db.getConnection();

var output = {

results: []

};

var query = 'select * from "HELLOBLOCK"."HelloBlock.DBARTIFACT::entity.BlockChain"';

var myStatement = conn.prepareStatement(query);

var rs = myStatement.executeQuery();

while (rs.next()) {

var record = {};

record.Index = rs.getInteger(1);

record.Data = rs.getNClob(2);

record.CommitedTime = rs.getString(3);

record.PrevBlockHash = rs.getNClob(4);

record.CurrentHash = rs.getNClob(5);

output.results.push(record);

}

rs.close();

myStatement.close();

conn.close();

} catch (e) {

$.response.status = $.net.http.INTERNAL_SERVER_ERROR;

$.response.setBody(e.message);

return;

}

var body = JSON.stringify(output);

$.response.contentType = 'application/json';

$.response.setBody(body);

$.response.status = $.net.http.OK;

}

function CurrentTImeStamp() {

var date = new Date();

var utcout = date.getFullYear() + '-' +

('0' + (date.getMonth() + 1)).slice(-2) + '-' +

('0' + date.getDate()).slice(-2) + 'T' +

('0' + date.getHours()).slice(-2) + ':' +

('0' + date.getMinutes()).slice(-2) + ':' +

('0' + date.getSeconds()).slice(-2);

return (utcout);

}

function CalculateHash(s) {

var chrsz = 8;

var hexcase = 0;

function safe_add(x, y) {

var lsw = (x & 0xFFFF) + (y & 0xFFFF);

var msw = (x >> 16) + (y >> 16) + (lsw >> 16);

return (msw << 16) | (lsw & 0xFFFF);

}

function S(X, n) {

return (X >>> n) | (X << (32 - n));

}

function R(X, n) {

return (X >>> n);

}

function Ch(x, y, z) {

return ((x & y) ^ ((~x) & z));

}

function Maj(x, y, z) {

return ((x & y) ^ (x & z) ^ (y & z));

}

function Sigma0256(x) {

return (S(x, 2) ^ S(x, 13) ^ S(x, 22));

}

function Sigma1256(x) {

return (S(x, 6) ^ S(x, 11) ^ S(x, 25));

}

function Gamma0256(x) {

return (S(x, 7) ^ S(x, 18) ^ R(x, 3));

}

function Gamma1256(x) {

return (S(x, 17) ^ S(x, 19) ^ R(x, 10));

}

function core_sha256(m, l) {

var K = new Array(0x428A2F98, 0x71374491, 0xB5C0FBCF, 0xE9B5DBA5, 0x3956C25B, 0x59F111F1, 0x923F82A4, 0xAB1C5ED5, 0xD807AA98, 0x12835B01,

0x243185BE, 0x550C7DC3, 0x72BE5D74, 0x80DEB1FE, 0x9BDC06A7, 0xC19BF174, 0xE49B69C1, 0xEFBE4786, 0xFC19DC6, 0x240CA1CC, 0x2DE92C6F,

0x4A7484AA, 0x5CB0A9DC, 0x76F988DA, 0x983E5152, 0xA831C66D, 0xB00327C8, 0xBF597FC7, 0xC6E00BF3, 0xD5A79147, 0x6CA6351, 0x14292967,

0x27B70A85, 0x2E1B2138, 0x4D2C6DFC, 0x53380D13, 0x650A7354, 0x766A0ABB, 0x81C2C92E, 0x92722C85, 0xA2BFE8A1, 0xA81A664B, 0xC24B8B70,

0xC76C51A3, 0xD192E819, 0xD6990624, 0xF40E3585, 0x106AA070, 0x19A4C116, 0x1E376C08, 0x2748774C, 0x34B0BCB5, 0x391C0CB3, 0x4ED8AA4A,

0x5B9CCA4F, 0x682E6FF3, 0x748F82EE, 0x78A5636F, 0x84C87814, 0x8CC70208, 0x90BEFFFA, 0xA4506CEB, 0xBEF9A3F7, 0xC67178F2);

var HASH = new Array(0x6A09E667, 0xBB67AE85, 0x3C6EF372, 0xA54FF53A, 0x510E527F, 0x9B05688C, 0x1F83D9AB, 0x5BE0CD19);

var W = new Array(64);

var a, b, c, d, e, f, g, h, i, j;

var T1, T2;

m[l >> 5] |= 0x80 << (24 - l % 32);

m[((l + 64 >> 9) << 4) + 15] = l;

for (var i = 0; i < m.length; i += 16) {

a = HASH[0];

b = HASH[1];

c = HASH[2];

d = HASH[3];

e = HASH[4];

f = HASH[5];

g = HASH[6];

h = HASH[7];

for (var j = 0; j < 64; j++) {

if (j < 16) W[j] = m[j + i];

else W[j] = safe_add(safe_add(safe_add(Gamma1256(W[j - 2]), W[j - 7]), Gamma0256(W[j - 15])), W[j - 16]);

T1 = safe_add(safe_add(safe_add(safe_add(h, Sigma1256(e)), Ch(e, f, g)), K[j]), W[j]);

T2 = safe_add(Sigma0256(a), Maj(a, b, c));

h = g;

g = f;

f = e;

e = safe_add(d, T1);

d = c;

c = b;

b = a;

a = safe_add(T1, T2);

}

HASH[0] = safe_add(a, HASH[0]);

HASH[1] = safe_add(b, HASH[1]);

HASH[2] = safe_add(c, HASH[2]);

HASH[3] = safe_add(d, HASH[3]);

HASH[4] = safe_add(e, HASH[4]);

HASH[5] = safe_add(f, HASH[5]);

HASH[6] = safe_add(g, HASH[6]);

HASH[7] = safe_add(h, HASH[7]);

}

return HASH;

}

function str2binb(str) {

var bin = Array();

var mask = (1 << chrsz) - 1;

for (var i = 0; i < str.length * chrsz; i += chrsz) {

bin[i >> 5] |= (str.charCodeAt(i / chrsz) & mask) << (24 - i % 32);

}

return bin;

}

function Utf8Encode(string) {

string = string.replace(/\r\n/g, "\n");

var utftext = "";

for (var n = 0; n < string.length; n++) {

var c = string.charCodeAt(n);

if (c < 128) {

utftext += String.fromCharCode(c);

} else if ((c > 127) && (c < 2048)) {

utftext += String.fromCharCode((c >> 6) | 192);

utftext += String.fromCharCode((c & 63) | 128);

} else {

utftext += String.fromCharCode((c >> 12) | 224);

utftext += String.fromCharCode(((c >> 6) & 63) | 128);

utftext += String.fromCharCode((c & 63) | 128);

}

}

return utftext;

}

function binb2hex(binarray) {

var hex_tab = hexcase ? "0123456789ABCDEF" : "0123456789abcdef";

var str = "";

for (var i = 0; i < binarray.length * 4; i++) {

str += hex_tab.charAt((binarray[i >> 2] >> ((3 - i % 4) * 8 + 4)) & 0xF) +

hex_tab.charAt((binarray[i >> 2] >> ((3 - i % 4) * 8)) & 0xF);

}

return str;

}

s = Utf8Encode(s);

return binb2hex(core_sha256(str2binb(s), s.length * chrsz));

}

function GetPreviousBlock() {

try {

var conn = $.db.getConnection();

var query = 'select COUNT(*) from "HELLOBLOCK"."HelloBlock.DBARTIFACT::entity.BlockChain"';

var myStatement = conn.prepareStatement(query);

var rs = myStatement.executeQuery();

if (rs.next()) {

var lv_index = rs.getString(1);

var index_int = parseInt(lv_index);

if (index_int < 1) {

var block = [];

block.push('0'); // empty chain: placeholder index 0, so the first block gets index 1

block.push('0000000X0');

} else {

var connhash = $.db.getConnection();

var query = 'select "current_hash" from \"HELLOBLOCK\".\"HelloBlock.DBARTIFACT::entity.BlockChain\" where "index" =?';

var pstmt = connhash.prepareStatement(query);

pstmt.setInteger(1, index_int);

var rec = pstmt.executeQuery();

if (rec.next()) {

var lv_prevhash = rec.getNClob(1);

var block = [];

block.push(lv_index);

block.push(lv_prevhash);

}

}

return (block);

} else {

$.response.status = $.net.http.INTERNAL_SERVER_ERROR;

$.response.setBody('Error in fetching Block Count');

}

} catch (e) {

var an = e.message;

}

}

function AddBlock(pdata, bindex, pbhash) {

var data;

var passed_index = parseInt(bindex);

var passed_pbhash = pbhash.toString();

var prevblock = GetPreviousBlock();

var prev_index = prevblock[0];

prev_index = parseInt(prev_index);

var index = prev_index + 1;

if (prevblock[1] !== '0000000X0') { // a real previous block exists, so store the user's data

data = pdata;

}else {

data = 'Genesis Block';

}

var prevHash = prevblock[1];

var timest = CurrentTImeStamp();

var actual_hash = CalculateHash(index.toString() + data.toString() + timest.toString() + prevHash.toString());

actual_hash = actual_hash.toUpperCase();

//Calling Procedure to add new Block

if (index !== passed_index) {

$.response.status = $.net.http.OK;

$.response.setBody("index is not valid");

} else if (pbhash !== prevHash) {

$.response.status = $.net.http.OK;

$.response.setBody("Previous hash is not valid");

} else {

var conn = $.db.getConnection();

var query = 'call \"HELLOBLOCK"."HelloBlock.Procedure::InsertBlock\"(?,?,?,?,?)';

var myStatement = conn.prepareCall(query);

myStatement.setInteger(1, index);

myStatement.setNClob(2, data);

myStatement.setString(3, timest);

myStatement.setNClob(4, prevHash);

myStatement.setNClob(5, actual_hash);

var rs = myStatement.execute();

conn.commit();

//Calling Procedure to add new Block

// Displaying Whole Block Chain

DisplayBlockChain();

// Displaying Whole Block Chain

}

}

function ValidCall() {

if (typeof BlockData === 'undefined') {

$.response.status = $.net.http.OK;

$.response.setBody("Pass Parameter data");

} else if (typeof BlockIndex === 'undefined') {

$.response.status = $.net.http.OK;

$.response.setBody("Pass Parameter index");

} else if (typeof PrevBlockHash === 'undefined') {

$.response.status = $.net.http.OK;

$.response.setBody("Pass Parameter prevhash");

} else {

AddBlock(BlockData, BlockIndex, PrevBlockHash);

}

}

switch (acmd) {

case "addblock":

ValidCall()

break;

case "chaindisp":

DisplayBlockChain();

break;

default:

$.response.status = $.net.http.OK;

$.response.setBody("Pass parameter action with value addblock or chaindisp");

}

Let's look at each function in more detail.

function CalculateHash(s): This function takes a plain-text string as input and returns its SHA-256 hash as a lowercase hexadecimal string.

function ValidCall(): This function checks all the input parameters of the XSJS service and stops if any of them is missing. To test the service you have to pass the action, data, index and prevhash parameters; without all of them it will not call AddBlock(), which performs the remaining validation and adds the block to the blockchain. I will come back to AddBlock() later for a deep dive.

function ValidCall() {

if (typeof BlockData === 'undefined') {

$.response.status = $.net.http.OK;

$.response.setBody("Pass Parameter data");

} else if (typeof BlockIndex === 'undefined') {

$.response.status = $.net.http.OK;

$.response.setBody("Pass Parameter index");

} else if (typeof PrevBlockHash === 'undefined') {

$.response.status = $.net.http.OK;

$.response.setBody("Pass Parameter prevhash");

} else {

AddBlock(BlockData, BlockIndex, PrevBlockHash);

}

}

function CurrentTImeStamp(): This function returns the current timestamp as a string in the format YYYY-MM-DDTHH:MM:SS.
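One thing to watch: CurrentTImeStamp() builds its string from getFullYear(), getHours(), getMinutes() and so on, which return the server's local time, while the commit_at column is declared as UTCTimestamp. If the XSJS server does not run in UTC, the stored times will be shifted. The Node.js sketch below builds the same format from the getUTC* accessors; formatUtcTimestamp and formatUtcTimestampIso are illustrative names, and since padStart is ES2017, older JavaScript engines may not provide it.

```javascript
// Sketch only: Node.js. getUTC* accessors ignore the server's local time zone.
function formatUtcTimestamp(date) {
  const pad = (n) => String(n).padStart(2, "0");
  return (
    date.getUTCFullYear() + "-" + pad(date.getUTCMonth() + 1) + "-" + pad(date.getUTCDate()) +
    "T" + pad(date.getUTCHours()) + ":" + pad(date.getUTCMinutes()) + ":" + pad(date.getUTCSeconds())
  );
}

// Shortcut with the same result: toISOString() is always in UTC,
// so dropping the milliseconds and the trailing "Z" leaves YYYY-MM-DDTHH:MM:SS.
function formatUtcTimestampIso(date) {
  return date.toISOString().slice(0, 19);
}

const sample = new Date(Date.UTC(2018, 0, 5, 3, 4, 5)); // 5 Jan 2018, 03:04:05 UTC
console.log(formatUtcTimestamp(sample));    // 2018-01-05T03:04:05
console.log(formatUtcTimestampIso(sample)); // 2018-01-05T03:04:05
```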

function CurrentTImeStamp() {

var date = new Date();

var utcout = date.getFullYear() + '-' +

('0' + (date.getMonth() + 1)).slice(-2) + '-' +

('0' + date.getDate()).slice(-2) + 'T' +

('0' + date.getHours()).slice(-2) + ':' +

('0' + date.getMinutes()).slice(-2) + ':' +

('0' + date.getSeconds()).slice(-2);

return (utcout);

}

function DisplayBlockChain(): This function selects all blocks (records) from the BlockChain table and returns them in JSON format.

function DisplayBlockChain() {

try {

var conn = $.db.getConnection();

var output = {

results: []

};

var query = 'select * from "HELLOBLOCK"."HelloBlock.DBARTIFACT::entity.BlockChain"';

var myStatement = conn.prepareStatement(query);

var rs = myStatement.executeQuery();

while (rs.next()) {

var record = {};

record.Index = rs.getInteger(1);

record.Data = rs.getNClob(2);

record.CommitedTime = rs.getString(3);

record.PrevBlockHash = rs.getNClob(4);

record.CurrentHash = rs.getNClob(5);

output.results.push(record);

}

rs.close();

myStatement.close();

conn.close();

} catch (e) {

$.response.status = $.net.http.INTERNAL_SERVER_ERROR;

$.response.setBody(e.message);

return;

}

var body = JSON.stringify(output);

$.response.contentType = 'application/json';

$.response.setBody(body);

$.response.status = $.net.http.OK;

}

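function GetPreviousBlock(): It returns the previous (latest) block as a two-element array: its index and its hash. It uses the row count of the table as the index of the latest block, which works as long as block indices start at 1 and have no gaps. If the table is empty, it returns a placeholder index together with the dummy hash 0000000X0, so the first block added becomes the genesis block.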

function GetPreviousBlock() {

try {

var conn = $.db.getConnection();

var query = 'select COUNT(*) from "HELLOBLOCK"."HelloBlock.DBARTIFACT::entity.BlockChain"';

var myStatement = conn.prepareStatement(query);

var rs = myStatement.executeQuery();

if (rs.next()) {

var lv_index = rs.getString(1);

var index_int = parseInt(lv_index);

if (index_int < 1) {

var block = [];

block.push('0'); // empty chain: placeholder index 0, so the first block gets index 1

block.push('0000000X0');

} else {

var connhash = $.db.getConnection();

var query = 'select "current_hash" from \"HELLOBLOCK\".\"HelloBlock.DBARTIFACT::entity.BlockChain\" where "index" =?';

var pstmt = connhash.prepareStatement(query);

pstmt.setInteger(1, index_int);

var rec = pstmt.executeQuery();

if (rec.next()) {

var lv_prevhash = rec.getNClob(1);

var block = [];

block.push(lv_index);

block.push(lv_prevhash);

}

}

return (block);

} else {

$.response.status = $.net.http.INTERNAL_SERVER_ERROR;

$.response.setBody('Error in fetching Block Count');

}

} catch (e) {

var an = e.message;

}

}

function AddBlock(): This function contains the main logic and validation. It checks whether the passed index and previous block hash are correct; if they are, it calculates the hash of the current block, calls the procedure to insert the block into the table, and calls DisplayBlockChain() to return the whole chain in JSON format. If the index or the previous hash is wrong, it responds with an error message instead of adding the block.

function AddBlock(pdata, bindex, pbhash) {

var data;

var passed_index = parseInt(bindex);

var passed_pbhash = pbhash.toString();

var prevblock = GetPreviousBlock();

var prev_index = prevblock[0];

prev_index = parseInt(prev_index);

var index = prev_index + 1;

if (prevblock[1] !== '0000000X0') { // a real previous block exists, so store the user's data

data = pdata;

}else {

data = 'Genesis Block';

}

var prevHash = prevblock[1];

var timest = CurrentTImeStamp();

var actual_hash = CalculateHash(index.toString() + data.toString() + timest.toString() + prevHash.toString());

actual_hash = actual_hash.toUpperCase();

//Calling Procedure to add new Block

if (index !== passed_index) {

$.response.status = $.net.http.OK;

$.response.setBody("index is not valid");

} else if (pbhash !== prevHash) {

$.response.status = $.net.http.OK;

$.response.setBody("Previous hash is not valid");

} else {

var conn = $.db.getConnection();

var query = 'call \"HELLOBLOCK"."HelloBlock.Procedure::InsertBlock\"(?,?,?,?,?)';

var myStatement = conn.prepareCall(query);

myStatement.setInteger(1, index);

myStatement.setNClob(2, data);

myStatement.setString(3, timest);

myStatement.setNClob(4, prevHash);

myStatement.setNClob(5, actual_hash);

var rs = myStatement.execute();

conn.commit();

//Calling Procedure to add new Block

// Displaying Whole Block Chain

DisplayBlockChain();

// Displaying Whole Block Chain

}

}

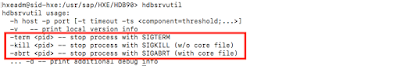

Now let's test it. First I will display the whole blockchain, then I will add a block to it and also try to manipulate a block. I used the classic Postman app for this.

First, display the entire chain by passing the parameter action=chaindisp.

The last block is 5, so the new block's index must be 6.

Let's push a block onto the blockchain by passing the parameters action=addblock, index=6, data=nitin->jim and prevhash set to the current hash of block 5.

After hitting the Send button, we can see that block 6 has just been added.

Now you can see that the previous block hash plays a major role: if you manipulate the data of one block, its hash changes and every block after it in the chain becomes invalid. At the same time, if you have understood this correctly, many of you already have a question: if anyone can pass the previous block hash and the correct index, then he or she can add blocks to the blockchain and spam it at will. You are right: this blockchain is not secure, and spamming it is possible.
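The tampering argument can be checked with a small Node.js sketch. isChainValid is an illustrative helper, not part of the XSJS service, which only checks the index and previous hash of each new block. The last step shows the weakness that motivates proof-of-work: when recomputing hashes is cheap, a tampered chain can simply be made valid again.

```javascript
// Sketch only: Node.js, in-memory chain; block layout mirrors the BlockChain table.
const crypto = require("crypto");

function calculateHash(block) {
  return crypto
    .createHash("sha256")
    .update(String(block.index) + block.data + block.timestamp + block.previousHash, "utf8")
    .digest("hex")
    .toUpperCase();
}

// Valid = every stored hash matches the block's contents, and every block
// points at the stored hash of the block before it.
function isChainValid(chain) {
  for (let i = 0; i < chain.length; i++) {
    if (chain[i].hash !== calculateHash(chain[i])) return false;
    if (i > 0 && chain[i].previousHash !== chain[i - 1].hash) return false;
  }
  return true;
}

// Build three linked blocks.
const chain = [];
let previousHash = "0000000X0";
["Genesis Block", "nitin->jim", "jim->anna"].forEach((data, i) => {
  const block = { index: i + 1, data, timestamp: "2018-01-01T00:0" + i + ":00", previousHash };
  block.hash = calculateHash(block);
  chain.push(block);
  previousHash = block.hash;
});
console.log(isChainValid(chain)); // true

chain[1].data = "nitin->mallory";          // tamper with block 2
console.log(isChainValid(chain));          // false: block 2's hash no longer matches
chain[1].hash = calculateHash(chain[1]);   // recompute block 2's hash
console.log(isChainValid(chain));          // false: block 3 still points at the old hash
chain[2].previousHash = chain[1].hash;     // so re-link and rehash block 3 too
chain[2].hash = calculateHash(chain[2]);
console.log(isChainValid(chain));          // true: nothing stops a full recalculation
```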

Let's secure it so that no one can spam it.

Because a blockchain runs on a peer-to-peer network, the nodes agree on one chain using the longest-chain rule.

Choosing the longest chain: at any given time there should be exactly one explicit set of blocks in the chain. In case of a conflict (e.g. two nodes both generate block number 72), we choose the chain that has the largest number of blocks.
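The longest-chain rule reduces to a small decision function. This is a sketch with hypothetical names; the validity check is passed in so the example stays focused on the selection rule. Real Bitcoin nodes compare the accumulated proof-of-work rather than the raw block count, but when every block has the same difficulty the two comparisons agree.

```javascript
// Sketch only: the longest-chain rule as a pure function.
// isValid is passed in (hypothetical), e.g. a full hash/link check of the chain.
function chooseChain(localChain, receivedChain, isValid) {
  if (receivedChain.length > localChain.length && isValid(receivedChain)) {
    return receivedChain; // strictly longer and valid: switch to it
  }
  return localChain; // shorter, equal length, or invalid: keep ours
}

const acceptAll = () => true;
const local = [{ index: 1 }, { index: 2 }];
const received = [{ index: 1 }, { index: 2 }, { index: 3 }];

console.log(chooseChain(local, received, acceptAll).length); // 3
console.log(chooseChain(received, local, acceptAll).length); // 3 (shorter chain ignored)
```

Keeping the local chain on a tie is a deliberate choice: it stops two nodes from flipping back and forth between equally long chains.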

The problem we have now

Right now, creating a block is so easy and fast that it leads to three different problems.

◈ First, people can add blocks incredibly fast and spam our blockchain. A flood of blocks would overload the blockchain and make it unusable.

◈ Second, because it is so easy to add a valid block, someone can tamper with one block and recalculate the hashes of all the blocks after it, and the whole chain becomes valid again.

◈ Third, because the blockchain runs on a P2P network, you can combine these two problems to take control of the whole blockchain. Blockchains are powered by a peer-to-peer network in which the nodes add blocks to the longest chain available. So you can tamper with a block, recalculate all the following blocks and then add as many blocks as you want. You will end up with the longest chain, and all the peers will accept it and start adding their own blocks to it.

To solve all these problems, we introduce proof-of-work.

What is proof-of-work?

Proof-of-work is a mechanism that existed before the first blockchain was created. It’s a simple technique that prevents abuse by requiring a certain amount of computing work. That amount of work is key to prevent spam and tampering. Spamming is no longer worth it if it requires a lot of computing power.

Bitcoin implements proof-of-work by requiring that the hash of a block be below a target value, which in practice means it must start with a certain number of zeros. How hard that target is to reach is called the difficulty. In Bitcoin, the difficulty is readjusted every 2016 blocks so that a new block is found roughly every 10 minutes.

But hang on a minute! How can the hash of a block change? In the case of Bitcoin, a block contains financial transactions. We surely don't want to mess with that data just to get a correct hash!

To fix this problem, blockchains add a nonce value. This is a number that gets incremented until a good hash is found. And because you cannot predict the output of a hash function, you simply have to try a lot of combinations before you get a hash that satisfies the difficulty. Looking for a valid hash (to create a new block) is also called “mining” in the cryptoworld.
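The nonce search described above can be sketched in a few lines of Node.js. The hash-input layout (index, data, nonce, timestamp, previous hash) and the helper names are illustrative assumptions, and the previous hash is a hypothetical placeholder; a difficulty of 2 hex zeros needs about 256 attempts on average, so it runs instantly.

```javascript
// Sketch only: Node.js; the hash-input layout is an illustrative choice.
const crypto = require("crypto");

function sha256Hex(text) {
  return crypto.createHash("sha256").update(text, "utf8").digest("hex");
}

function blockHash(index, data, timestamp, previousHash, nonce) {
  return sha256Hex(String(index) + data + String(nonce) + timestamp + previousHash);
}

// Try nonce = 0, 1, 2, ... until the hash starts with `difficulty` zero hex digits.
function mine(index, data, timestamp, previousHash, difficulty) {
  const target = "0".repeat(difficulty);
  let nonce = 0;
  let hash = blockHash(index, data, timestamp, previousHash, nonce);
  while (!hash.startsWith(target)) {
    nonce++;
    hash = blockHash(index, data, timestamp, previousHash, nonce);
  }
  return { nonce, hash };
}

// The data never changes; only the nonce does.
const mined = mine(6, "nitin->jim", "2018-01-01T00:00:00", "PREVIOUS-BLOCK-HASH", 2);
console.log(mined.nonce, mined.hash);
```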

In Bitcoin, proof-of-work keeps block production to roughly one block every 10 minutes on average. You can imagine how hard it is for spammers to fool the network if they need that much computing power just to create a new block, let alone tamper with the entire chain.

Implementing proof-of-work

Let's look at our new block structure. I added one more field to the table: nonce.

@Catalog.tableType: #COLUMN

Entity BlockChainPOW{

key index : Integer;

data : LargeString;

commit_at : UTCTimestamp;

previous_hash : LargeString;

current_hash: LargeString;

nonce : Integer;

};

As before, I created a procedure to add an entry to the blockchain table:

PROCEDURE "HELLOBLOCK"."HelloBlock.Procedure::InsertBlockPOW" (

IN block_index Integer,

IN block_data Nclob,

IN block_commit TIMESTAMP,

IN prevblock_hash Nclob,

IN Currblock_hash Nclob,

IN BlockNonce Integer

)

LANGUAGE SQLSCRIPT

SQL SECURITY INVOKER AS

--DEFAULT SCHEMA <default_schema_name>

-- READS SQL DATA AS

BEGIN

/*************************************

Write your procedure logic

*************************************/

INSERT INTO "HELLOBLOCK"."HelloBlock.DBARTIFACT::entity.BlockChainPOW"("index","data","commit_at","previous_hash","current_hash","nonce")

VALUES (:block_index,:block_data,:block_commit,:prevblock_hash,:Currblock_hash,:BlockNonce);

END

We defined the difficulty so that the required number of leading zeros increases by one every 10 blocks. Initially it is 2 (e.g. 00A3F4…); once the block index reaches 10, the hash must start with three zeros (e.g. 000F4D…).

var difficulty = 2 + (index / 10);

difficulty = parseInt(difficulty);
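The difficulty rule above is easy to tabulate. In this Node.js sketch, difficultyFor is an illustrative name; Math.floor gives the same result as parseInt here because the value is always positive. Each extra hex zero makes a valid hash about 16 times rarer, so the expected mining work grows sixteenfold every 10 blocks.

```javascript
// Sketch only: the post's difficulty rule, 2 + (index / 10) truncated to an integer.
// Math.floor matches parseInt here because the value is always positive.
function difficultyFor(index) {
  return 2 + Math.floor(index / 10);
}

for (const index of [1, 9, 10, 19, 20]) {
  console.log(index, difficultyFor(index), "0".repeat(difficultyFor(index)));
}
// 1 2 00
// 9 2 00
// 10 3 000
// 19 3 000
// 20 4 0000
```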

This time I introduced a new function, MineBlock. It takes the difficulty, index, data, timestamp, previous hash and the initial hash of the block (computed with nonce 0), keeps incrementing the nonce until it finds a hash that satisfies the difficulty, and returns that nonce and hash.

function MineBlock(pdifficulty, pindex, pdata, ptimest, prevhash, phash) {

var actdiff = parseInt(pdifficulty);

var actind = parseInt(pindex);

var genhash = phash.toString();

var actnonce = 0;

while (genhash.substring(0, actdiff) !== Array(actdiff + 1).join("0")) {

actnonce++;

genhash = CalculateHash(pindex.toString() + JSON.stringify(pdata.toString() + actnonce.toString()).toString() + ptimest.toString() +

prevhash.toString());

}

var mblock = [];

mblock.push(actnonce);

mblock.push(genhash);

return mblock;

}

AddBlock() and ValidCall() need a few small modifications.

First we look at ValidCall(), then we will move on to AddBlock().

This time I introduced two more parameters: nonce and mine. The mine parameter has two modes, auto and manual. If you just want to test and don't want to pass a nonce, choose auto; if you want to pass a nonce, set mine to manual. ValidCall() validates your input parameters and, if they are correct, calls AddBlock().

function ValidCall() {

//jshint maxdepth:5;

/*eslint max-depth: [22, 22]*/

if (typeof BlockData === 'undefined') {

$.response.status = $.net.http.OK;

$.response.setBody("Pass Parameter data");

} else if (typeof BlockIndex === 'undefined') {

$.response.status = $.net.http.OK;

$.response.setBody("Pass Parameter index");

} else if (typeof PrevBlockHash === 'undefined') {

$.response.status = $.net.http.OK;

$.response.setBody("Pass Parameter prevhash");

} else if (typeof MiningOP === 'undefined') {

$.response.status = $.net.http.OK;

$.response.setBody("Pass Parameter mine , accepted value auto or manual");

} else if (typeof MiningOP !== 'undefined') {

if (MiningOP !== 'manual'&& MiningOP !== 'auto') {

$.response.status = $.net.http.OK;

$.response.setBody("Pass Parameter mine , accepted value auto or manual");

} else if (MiningOP === 'manual') {

if (typeof Nonce === 'undefined'){

$.response.status = $.net.http.OK;

$.response.setBody("In manual mining you have to pass parameter nonce,Better you go with auto because generating nonce is not everyone's cup of tea ");

} else {

AddBlock(BlockData, BlockIndex, PrevBlockHash, Nonce);

}

} else {

AddBlock(BlockData, BlockIndex, PrevBlockHash, 'auto');

}

}

}

Now let's look at AddBlock().

function AddBlock(data, bindex, pbhash, psnonce) {

// var anonce = parseInt(pnonce);

var passed_index = parseInt(bindex);

var passed_pbhash = pbhash.toString();

var prevblock = GetPreviousBlock();

var prev_index = prevblock[0];

prev_index = parseInt(prev_index);

var index = prev_index + 1;

var difficulty = 2 + (index / 10);

difficulty = parseInt(difficulty);

var data = data;

var prevHash = prevblock[1];

var timest = CurrentTImeStamp();

var intnonce = 0;

function bmvalidation() {

//Calling Procedure to add new Block

if (index !== passed_index) {

$.response.status = $.net.http.OK;

$.response.setBody("index is not valid");

} else if (pbhash !== prevHash) {

$.response.status = $.net.http.OK;

$.response.setBody("Previous hash is not valid");

} else {

if (psnonce === 'auto') {

var initial_hash = CalculateHash(index.toString() + JSON.stringify(data.toString() + intnonce.toString()).toString() + timest.toString() +

prevHash.toString());

var minedblock = MineBlock(difficulty, index, data, timest, prevHash, initial_hash);

var minenonce = minedblock[0];

minenonce = parseInt(minenonce);

var minehash = minedblock[1];

minehash = minehash.toUpperCase();

var conn = $.db.getConnection();

var query = 'call \"HELLOBLOCK"."HelloBlock.Procedure::InsertBlockPOW\"(?,?,?,?,?,?)';

var myStatement = conn.prepareCall(query);

myStatement.setInteger(1, index);

myStatement.setNClob(2, data);

myStatement.setString(3, timest);

myStatement.setNClob(4, prevHash);

myStatement.setNClob(5, minehash);

myStatement.setInteger(6, minenonce);

var rs = myStatement.execute();

conn.commit();

//Calling Procedure to add new Block

// Displaying Whole Block Chain

DisplayBlockChain();

// Displaying Whole Block Chain

} else {

var initial_hash = CalculateHash(index.toString() + JSON.stringify(data.toString() + intnonce.toString()).toString() + timest.toString() +

prevHash.toString());

var minedblock = MineBlock(difficulty, index, data, timest, prevHash, initial_hash);

var minenonce = minedblock[0];

minenonce = parseInt(minenonce);

var minernonce = parseInt(psnonce);

var minehash = minedblock[1];

if (minenonce !== minernonce) {

$.response.status = $.net.http.OK;

$.response.setBody("Try with different nonce, your computed nonce is not enough to add block in Blockchain");

} else {

var conn = $.db.getConnection();

var query = 'call \"HELLOBLOCK"."HelloBlock.Procedure::InsertBlockPOW\"(?,?,?,?,?,?)';

var myStatement = conn.prepareCall(query);

myStatement.setInteger(1, index);

myStatement.setNClob(2, data);

myStatement.setString(3, timest);

myStatement.setNClob(4, prevHash);

myStatement.setNClob(5, minehash);

myStatement.setInteger(6, minenonce);

var rs = myStatement.execute();

conn.commit();

//Calling Procedure to add new Block

// Displaying Whole Block Chain

DisplayBlockChain();

// Displaying Whole Block Chain

}

}

}

}

bmvalidation();

}

This time AddBlock() takes one extra parameter, the nonce. If you pass mine=auto in the XSJS service call, the string auto is passed as the nonce. In auto mode, the function starts with nonce 0, determines the difficulty using the formula defined above, and calls MineBlock(), which returns a valid hash and nonce; then it adds the block, as simple as that. Please note that in a real blockchain you cannot just send mine=auto; I added it here only for testing, because finding a nonce by hand is difficult. In a real blockchain, to mine a block you have to find the nonce using your own computing power. If you send mine=manual, you must also pass the nonce parameter. The service then mines the block itself and compares your nonce with the nonce it found; only if they are the same will it let you add the block to the blockchain. Now you can see how secure this is: at a high difficulty level, finding a valid nonce takes an enormous amount of computation. Let's put everything together.
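In manual mode, AddBlock() re-runs MineBlock() and accepts only the first valid nonce; it also generates the timestamp itself when the request arrives, so a client cannot know the exact hash input in advance. A more conventional design, sketched here in Node.js under the assumption that the client submits the timestamp along with the nonce, is to recompute the hash once and accept any nonce that meets the difficulty. The hash input mirrors MineBlock's layout; isNonceValid and the sample inputs are hypothetical.

```javascript
// Sketch only: Node.js. The hash input mirrors MineBlock's layout:
// index + JSON.stringify(data + nonce) + timestamp + previous hash.
const crypto = require("crypto");

function blockHash(index, data, timestamp, previousHash, nonce) {
  const input = String(index) + JSON.stringify(String(data) + String(nonce)) + timestamp + previousHash;
  return crypto.createHash("sha256").update(input, "utf8").digest("hex");
}

// Verifying costs one hash, however many attempts it took to find the nonce.
// Any nonce that meets the difficulty is accepted, not only the first one.
function isNonceValid(index, data, timestamp, previousHash, nonce, difficulty) {
  return blockHash(index, data, timestamp, previousHash, nonce).startsWith("0".repeat(difficulty));
}

// The miner's side: search for a nonce (hypothetical inputs, difficulty 2).
const args = [6, "nitin->jim", "2018-01-01T00:00:00", "PREVIOUS-BLOCK-HASH"];
let nonce = 0;
while (!isNonceValid(...args, nonce, 2)) nonce++;
console.log(isNonceValid(...args, nonce, 2)); // true
```

This asymmetry (expensive to find, one hash to check) is what lets every node verify new blocks cheaply.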

var acmd = $.request.parameters.get("action");

var BlockData = $.request.parameters.get("data");

var BlockIndex = $.request.parameters.get("index");

var PrevBlockHash = $.request.parameters.get("prevhash");

var Nonce = $.request.parameters.get("nonce");

var MiningOP = $.request.parameters.get("mine");

function DisplayBlockChain() {

try {

var conn = $.db.getConnection();

var output = {

results: []

};

var query = 'select * from "HELLOBLOCK"."HelloBlock.DBARTIFACT::entity.BlockChainPOW"';

var myStatement = conn.prepareStatement(query);

var rs = myStatement.executeQuery();

while (rs.next()) {

var record = {};

record.Index = rs.getInteger(1);

record.Data = rs.getNClob(2);

record.CommitedTime = rs.getString(3);

record.PrevBlockHash = rs.getNClob(4);

record.CurrentHash = rs.getNClob(5);

record.Nonce = rs.getInteger(6);

output.results.push(record);

}

rs.close();

myStatement.close();

conn.close();

} catch (e) {

$.response.status = $.net.http.INTERNAL_SERVER_ERROR;

$.response.setBody(e.message);

return;

}

var body = JSON.stringify(output);

$.response.contentType = 'application/json';

$.response.setBody(body);

$.response.status = $.net.http.OK;

}

function CurrentTImeStamp() {

var date = new Date();

var utcout = date.getFullYear() + '-' +

('0' + (date.getMonth() + 1)).slice(-2) + '-' +

('0' + date.getDate()).slice(-2) + 'T' +

('0' + date.getHours()).slice(-2) + ':' +

('0' + date.getMinutes()).slice(-2) + ':' +

('0' + date.getSeconds()).slice(-2);

return (utcout);

}

function CalculateHash(s) {

var chrsz = 8;

var hexcase = 0;

function safe_add(x, y) {

var lsw = (x & 0xFFFF) + (y & 0xFFFF);

var msw = (x >> 16) + (y >> 16) + (lsw >> 16);

return (msw << 16) | (lsw & 0xFFFF);

}

function S(X, n) {

return (X >>> n) | (X << (32 - n));

}

function R(X, n) {

return (X >>> n);

}

function Ch(x, y, z) {

return ((x & y) ^ ((~x) & z));

}

function Maj(x, y, z) {

return ((x & y) ^ (x & z) ^ (y & z));

}

function Sigma0256(x) {

return (S(x, 2) ^ S(x, 13) ^ S(x, 22));

}

function Sigma1256(x) {

return (S(x, 6) ^ S(x, 11) ^ S(x, 25));

}

function Gamma0256(x) {

return (S(x, 7) ^ S(x, 18) ^ R(x, 3));

}

function Gamma1256(x) {

return (S(x, 17) ^ S(x, 19) ^ R(x, 10));

}

function core_sha256(m, l) {

var K = new Array(0x428A2F98, 0x71374491, 0xB5C0FBCF, 0xE9B5DBA5, 0x3956C25B, 0x59F111F1, 0x923F82A4, 0xAB1C5ED5, 0xD807AA98, 0x12835B01,

0x243185BE, 0x550C7DC3, 0x72BE5D74, 0x80DEB1FE, 0x9BDC06A7, 0xC19BF174, 0xE49B69C1, 0xEFBE4786, 0xFC19DC6, 0x240CA1CC, 0x2DE92C6F,

0x4A7484AA, 0x5CB0A9DC, 0x76F988DA, 0x983E5152, 0xA831C66D, 0xB00327C8, 0xBF597FC7, 0xC6E00BF3, 0xD5A79147, 0x6CA6351, 0x14292967,

0x27B70A85, 0x2E1B2138, 0x4D2C6DFC, 0x53380D13, 0x650A7354, 0x766A0ABB, 0x81C2C92E, 0x92722C85, 0xA2BFE8A1, 0xA81A664B, 0xC24B8B70,

0xC76C51A3, 0xD192E819, 0xD6990624, 0xF40E3585, 0x106AA070, 0x19A4C116, 0x1E376C08, 0x2748774C, 0x34B0BCB5, 0x391C0CB3, 0x4ED8AA4A,

0x5B9CCA4F, 0x682E6FF3, 0x748F82EE, 0x78A5636F, 0x84C87814, 0x8CC70208, 0x90BEFFFA, 0xA4506CEB, 0xBEF9A3F7, 0xC67178F2);

var HASH = new Array(0x6A09E667, 0xBB67AE85, 0x3C6EF372, 0xA54FF53A, 0x510E527F, 0x9B05688C, 0x1F83D9AB, 0x5BE0CD19);

var W = new Array(64);

var a, b, c, d, e, f, g, h, i, j;

var T1, T2;

m[l >> 5] |= 0x80 << (24 - l % 32);

m[((l + 64 >> 9) << 4) + 15] = l;

for (var i = 0; i < m.length; i += 16) {

a = HASH[0];

b = HASH[1];

c = HASH[2];

d = HASH[3];

e = HASH[4];

f = HASH[5];

g = HASH[6];

h = HASH[7];

for (var j = 0; j < 64; j++) {

if (j < 16) W[j] = m[j + i];

else W[j] = safe_add(safe_add(safe_add(Gamma1256(W[j - 2]), W[j - 7]), Gamma0256(W[j - 15])), W[j - 16]);

T1 = safe_add(safe_add(safe_add(safe_add(h, Sigma1256(e)), Ch(e, f, g)), K[j]), W[j]);

T2 = safe_add(Sigma0256(a), Maj(a, b, c));

h = g;

g = f;

f = e;

e = safe_add(d, T1);

d = c;

c = b;

b = a;

a = safe_add(T1, T2);

}

HASH[0] = safe_add(a, HASH[0]);

HASH[1] = safe_add(b, HASH[1]);

HASH[2] = safe_add(c, HASH[2]);

HASH[3] = safe_add(d, HASH[3]);

HASH[4] = safe_add(e, HASH[4]);

HASH[5] = safe_add(f, HASH[5]);

HASH[6] = safe_add(g, HASH[6]);

HASH[7] = safe_add(h, HASH[7]);

}

return HASH;

}

function str2binb(str) {

var bin = Array();

var mask = (1 << chrsz) - 1;

for (var i = 0; i < str.length * chrsz; i += chrsz) {

bin[i >> 5] |= (str.charCodeAt(i / chrsz) & mask) << (24 - i % 32);

}

return bin;

}

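function Utf8Encode(string) {

// Encodes each UTF-16 code unit on its own, so characters outside the Basic Multilingual Plane (e.g. emoji) become two 3-byte sequences and their hash differs from standard SHA-256 over UTF-8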

string = string.replace(/\r\n/g, "\n");

var utftext = "";

for (var n = 0; n < string.length; n++) {

var c = string.charCodeAt(n);

if (c < 128) {

utftext += String.fromCharCode(c);

} else if ((c > 127) && (c < 2048)) {

utftext += String.fromCharCode((c >> 6) | 192);

utftext += String.fromCharCode((c & 63) | 128);

} else {

utftext += String.fromCharCode((c >> 12) | 224);

utftext += String.fromCharCode(((c >> 6) & 63) | 128);

utftext += String.fromCharCode((c & 63) | 128);

}

}

return utftext;

}

function binb2hex(binarray) {

var hex_tab = hexcase ? "0123456789ABCDEF" : "0123456789abcdef";

var str = "";

for (var i = 0; i < binarray.length * 4; i++) {

str += hex_tab.charAt((binarray[i >> 2] >> ((3 - i % 4) * 8 + 4)) & 0xF) +

hex_tab.charAt((binarray[i >> 2] >> ((3 - i % 4) * 8)) & 0xF);

}

return str;

}

s = Utf8Encode(s);

return binb2hex(core_sha256(str2binb(s), s.length * chrsz));

}

function GetPreviousBlock() {

// Returns [index, hash] of the last block, or the genesis values ['0', '0000000X0'] for an empty chain.

// Database errors propagate to the caller instead of being swallowed; the connection is always closed.

var conn = $.db.getConnection();

var block = null;

try {

var query = 'select COUNT(*) from "HELLOBLOCK"."HelloBlock.DBARTIFACT::entity.BlockChainPOW"';

var myStatement = conn.prepareStatement(query);

var rs = myStatement.executeQuery();

if (!rs.next()) {

$.response.status = $.net.http.INTERNAL_SERVER_ERROR;

$.response.setBody('Error in fetching Block Count');

return null;

}

var lv_index = rs.getString(1);

var index_int = parseInt(lv_index, 10);

rs.close();

myStatement.close();

if (index_int < 1) {

// Empty table: start from the hard-coded genesis values

block = ['0', '0000000X0'];

} else {

// Assumes block indexes are contiguous from 1, so the block count is the index of the last block

var hashQuery = 'select "current_hash" from "HELLOBLOCK"."HelloBlock.DBARTIFACT::entity.BlockChainPOW" where "index" = ?';

var pstmt = conn.prepareStatement(hashQuery);

pstmt.setInteger(1, index_int);

var rec = pstmt.executeQuery();

if (rec.next()) {

block = [lv_index, rec.getNClob(1)];

}

rec.close();

pstmt.close();

}

} finally {

conn.close();

}

return block;

}

function MineBlock(pdifficulty, pindex, pdata, ptimest, prevhash, phash) {

// Proof of work: starting from the hash for nonce 0, increment the nonce until the hash starts with pdifficulty zeros, then return [nonce, hash]

var actdiff = parseInt(pdifficulty, 10);

var target = Array(actdiff + 1).join("0");

var genhash = phash.toString();

var actnonce = 0;

while (genhash.substring(0, actdiff) !== target) {

actnonce++;

genhash = CalculateHash(pindex.toString() + JSON.stringify(pdata.toString() + actnonce.toString()).toString() + ptimest.toString() + prevhash.toString());

}

return [actnonce, genhash];

}

function AddBlock(data, bindex, pbhash, psnonce) {

// Validates the request against the last block, mines the new block and stores it.

// psnonce is 'auto' (the server mines) or the nonce submitted for manual mining.

var passed_index = parseInt(bindex, 10);

var passed_pbhash = pbhash.toString();

var prevblock = GetPreviousBlock();

if (!prevblock) {

$.response.status = $.net.http.INTERNAL_SERVER_ERROR;

$.response.setBody('Error in fetching previous block');

return;

}

var index = parseInt(prevblock[0], 10) + 1;

// Required number of leading zeros: 2 for blocks 1-9, 3 for blocks 10-19, and so on

var difficulty = parseInt(2 + (index / 10), 10);

var prevHash = prevblock[1];

var timest = CurrentTImeStamp();

var intnonce = 0;

if (index !== passed_index) {

$.response.status = $.net.http.OK;

$.response.setBody("index is not valid");

return;

}

if (passed_pbhash !== prevHash) {

$.response.status = $.net.http.OK;

$.response.setBody("Previous hash is not valid");

return;

}

// Hash with nonce 0, then mine until the hash meets the difficulty target

var initial_hash = CalculateHash(index.toString() + JSON.stringify(data.toString() + intnonce.toString()).toString() + timest.toString() + prevHash.toString());

var minedblock = MineBlock(difficulty, index, data, timest, prevHash, initial_hash);

var minenonce = parseInt(minedblock[0], 10);

// Hashes are stored upper-cased in both auto and manual mode

var minehash = minedblock[1].toUpperCase();

// Manual mining: the submitted nonce must equal the nonce the server found

if (psnonce !== 'auto' && minenonce !== parseInt(psnonce, 10)) {

$.response.status = $.net.http.OK;

$.response.setBody("Try a different nonce; your computed nonce is not valid for this block");

return;

}

// Calling procedure to add the new block

var conn = $.db.getConnection();

try {

var query = 'call "HELLOBLOCK"."HelloBlock.Procedure::InsertBlockPOW"(?,?,?,?,?,?)';

var myStatement = conn.prepareCall(query);

myStatement.setInteger(1, index);

myStatement.setNClob(2, data);

myStatement.setString(3, timest);

myStatement.setNClob(4, prevHash);

myStatement.setNClob(5, minehash);

// The nonce is bound as an integer in both modes

myStatement.setInteger(6, minenonce);

myStatement.execute();

conn.commit();

myStatement.close();

} finally {

conn.close();

}

// Displaying the whole blockchain

DisplayBlockChain();

}

function ValidCall() {

//jshint maxdepth:5;

/*eslint max-depth: [22, 22]*/

// Checks the request parameters, then adds the block in auto or manual mining mode

if (typeof BlockData === 'undefined') {

$.response.status = $.net.http.OK;

$.response.setBody("Pass parameter data");

} else if (typeof BlockIndex === 'undefined') {

$.response.status = $.net.http.OK;

$.response.setBody("Pass parameter index");

} else if (typeof PrevBlockHash === 'undefined') {

$.response.status = $.net.http.OK;

$.response.setBody("Pass parameter prevhash");

} else if (typeof MiningOP === 'undefined' || (MiningOP !== 'manual' && MiningOP !== 'auto')) {

$.response.status = $.net.http.OK;

$.response.setBody("Pass parameter mine; accepted values are auto or manual");

} else if (MiningOP === 'manual' && typeof Nonce === 'undefined') {

$.response.status = $.net.http.OK;

$.response.setBody("In manual mining you have to pass the parameter nonce. Better go with auto, because generating a nonce is not everyone's cup of tea.");

} else if (MiningOP === 'manual') {

AddBlock(BlockData, BlockIndex, PrevBlockHash, Nonce);

} else {

AddBlock(BlockData, BlockIndex, PrevBlockHash, 'auto');

}

}

switch (acmd) {

case "addblock":

ValidCall();

break;

case "chaindisp":

DisplayBlockChain();

break;

default:

$.response.status = $.net.http.OK;

$.response.setBody("Unknown or missing command: " + acmd + " (accepted values: addblock, chaindisp)");

}

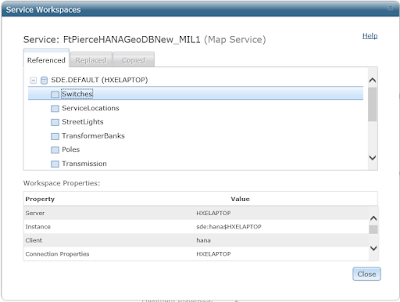
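Outside HANA XS the same hashes can be reproduced with any standard SHA-256 library, which is handy for checking the chain from a client. The following is a minimal Node.js sketch under these assumptions: Node's built-in `crypto` module stands in for the hand-rolled `CalculateHash` (for text without characters outside the Basic Multilingual Plane both should give the same lowercase hex digest), the hash input is rebuilt exactly as `AddBlock` and `MineBlock` build it, and the records have the shape returned by `DisplayBlockChain` (`Index`, `Data`, `CommitedTime`, `PrevBlockHash`, `CurrentHash`, `Nonce`). The helper names `sha256Hex`, `blockHash` and `findBrokenBlock` are hypothetical.

```javascript
// Minimal Node.js sketch, not part of the HelloBlock project.
const crypto = require("crypto");

// Standard SHA-256 of the UTF-8 bytes, as lowercase hex (same format as CalculateHash).
function sha256Hex(text) {
    return crypto.createHash("sha256").update(text, "utf8").digest("hex");
}

// Same input string as in AddBlock/MineBlock:
// index + JSON.stringify(data + nonce) + timestamp + previous hash
function blockHash(index, data, nonce, timestamp, prevHash) {
    return sha256Hex(String(index) + JSON.stringify(String(data) + String(nonce)) +
        String(timestamp) + String(prevHash));
}

// Returns the array position of the first block whose stored hash or link is wrong,
// or -1 if every block is consistent. genesisHash is what the first block points to.
function findBrokenBlock(records, genesisHash) {
    let expectedPrev = genesisHash;
    for (let i = 0; i < records.length; i++) {
        const r = records[i];
        const recomputed = blockHash(r.Index, r.Data, r.Nonce, r.CommitedTime, r.PrevBlockHash);
        // Stored hashes may be upper-cased, so compare case-insensitively.
        const hashOk = recomputed.toUpperCase() === String(r.CurrentHash).toUpperCase();
        if (String(r.PrevBlockHash) !== String(expectedPrev) || !hashOk) {
            return i;
        }
        expectedPrev = r.CurrentHash;
    }
    return -1;
}
```

Called on the `results` array from `chaindisp` with `'0000000X0'` (the genesis value hard-coded in `GetPreviousBlock`), it should return -1, assuming `CommitedTime` comes back from the database as exactly the string that was hashed. Changing the `Data` of any stored block makes it return that block's position, which is the immutability property described at the start of this post.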

Let's test it with the classic Postman.

Now you can see the pattern of the hash and the nonce it returned. Let's try to add a block using a random nonce.
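The "pattern" is the proof-of-work target: `MineBlock` keeps incrementing the nonce until the hex hash starts with `difficulty` zeros, and `AddBlock` derives the difficulty from the block index with `parseInt(2 + index / 10)`. Here is a minimal Node.js sketch of the same loop, assuming Node's built-in `crypto` module in place of `CalculateHash`; `blockHash`, `difficultyFor` and `mineBlock` are hypothetical names, not part of the project.

```javascript
// Minimal Node.js sketch of the MineBlock loop; not the XSJS service itself.
const crypto = require("crypto");

// Block hash built from the same input string as in AddBlock/MineBlock.
function blockHash(index, data, nonce, timestamp, prevHash) {
    const input = String(index) + JSON.stringify(String(data) + String(nonce)) +
        String(timestamp) + String(prevHash);
    return crypto.createHash("sha256").update(input, "utf8").digest("hex");
}

// Equivalent to parseInt(2 + index / 10) for non-negative indexes:
// two leading zeros for blocks 0-9, three for 10-19, and so on.
function difficultyFor(index) {
    return 2 + Math.floor(index / 10);
}

// Tries nonce 0, 1, 2, ... and returns the first one whose hash meets the target.
function mineBlock(index, data, timestamp, prevHash) {
    const target = "0".repeat(difficultyFor(index));
    let nonce = 0;
    let hash = blockHash(index, data, nonce, timestamp, prevHash);
    while (!hash.startsWith(target)) {
        nonce++;
        hash = blockHash(index, data, nonce, timestamp, prevHash);
    }
    // The auto path of AddBlock stores the hash upper-cased.
    return { nonce: nonce, hash: hash.toUpperCase() };
}
```

For example, `mineBlock(1, "hello", "2018-01-01T00:00:00", "0000000X0")` searches for a hash starting with `00`. Because the loop always starts at 0, the nonce it returns is the smallest valid one for that input.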

Look at the response. Now let's add the block automatically to see which hash and nonce are valid for this block.
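In manual mode `AddBlock` does not check the submitted nonce on its own: it mines the block itself and accepts only the exact nonce its own search found first. A more conventional proof-of-work check needs just one hash computation and accepts any nonce that meets the target. The sketch below shows that alternative in Node.js; it is not what HelloBlock does, and `isValidNonce` is a hypothetical name. In either design, as far as the code shows, a miner can only compute a valid nonce in advance if it knows every hashed field, including the timestamp, which the service generates itself when the request arrives.

```javascript
// Node.js sketch of a conventional proof-of-work check (an alternative, not HelloBlock's logic).
const crypto = require("crypto");

// Block hash built from the same input string as in AddBlock/MineBlock.
function blockHash(index, data, nonce, timestamp, prevHash) {
    const input = String(index) + JSON.stringify(String(data) + String(nonce)) +
        String(timestamp) + String(prevHash);
    return crypto.createHash("sha256").update(input, "utf8").digest("hex");
}

// One hash computation: the nonce is valid if the hash starts with `difficulty` zeros.
function isValidNonce(index, data, nonce, timestamp, prevHash, difficulty) {
    return blockHash(index, data, nonce, timestamp, prevHash).startsWith("0".repeat(difficulty));
}
```

Checking costs a single hash while finding a nonce costs many; that asymmetry is what makes proof of work cheap to verify and expensive to produce.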

The block was added with the valid nonce 385. To keep the example simple I defined an easy difficulty; in a real use case the difficulty is much higher, so finding a valid nonce takes far more computation.
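To put numbers on "easy difficulty": `AddBlock` sets the number of leading zeros $d$ from the block index $i$ with `parseInt(2 + index / 10)`, and `MineBlock` counts nonces up from 0. If each hex digit of a SHA-256 hash is treated as uniformly random (an idealizing assumption), the expected work per block is:

$$
d(i) = 2 + \left\lfloor \frac{i}{10} \right\rfloor, \qquad
\Pr[\text{hash starts with } d \text{ zeros}] = 16^{-d}
$$

$$
\mathbb{E}[\text{hashes tried}] = 16^{d}, \qquad
\mathbb{E}[\text{returned nonce}] = 16^{d} - 1
$$

At $d = 2$ that is 256 hashes on average, so a nonce in the hundreds, like 385, is ordinary; every further ten blocks multiplies the expected work by 16 (4,096 hashes at $d = 3$, 65,536 at $d = 4$).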

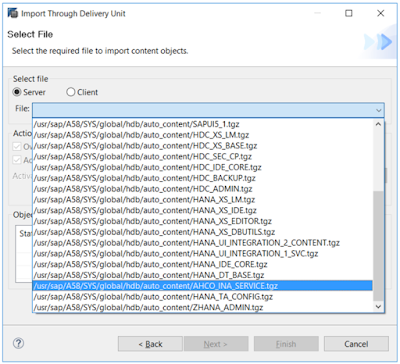

The whole project (HelloBlock) is available on GitHub. You can also import it as a delivery unit; in that case you have to assign the role to your user so that it can execute the service. I hope you like this blog; new ideas and suggestions are always welcome. In the next blog we will see how to build an end-to-end blockchain application for a supply chain use case using SAP Cloud Platform.