When I help partners set up their HANA Express servers for development use, I like to configure them to mimic the behavior of Cloud Foundry so that they get used to how XSA-generated URLs work. This approach also tends to promote deployment-target-agnostic behavior and leads to projects that deploy into both on-premise HANA XSA and Cloud Foundry environments without any code differences.

Note: In this post, I’m using hxe.local.com as my server’s fully qualified domain name. You will want to replace this name with your own choice of server name throughout the example commands in this post.

The primary problem is that the HANA Express (HXE) installer assumes ports routing mode. In ports routing mode, applications provided by the XSA system get URLs like this:

https://hxe.local.com:53075

URLs in Cloud Foundry (CF) look like this:

https://webide.cfapps.us10.hana.ondemand.com

While I can’t (currently) make apps deployed into CF use my own domain name, I can configure HXE to create URLs in a similar way by installing it in hostnames routing mode, which results in a URL like this:

https://webide.hxe.local.com
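To make the difference concrete, the sketch below only formats strings; it doesn’t talk to XSA. xsa_app_url is a hypothetical helper (not part of HXE or the xs CLI). It assumes the port in ports mode is whichever one XSA assigned, and that an explicit :443 is dropped because it is the HTTPS default.

```shell
# Hypothetical helper: format the URL shape that each XSA routing mode produces.
#   ports mode:     https://<fqdn>:<port>
#   hostnames mode: https://<app>.<fqdn>[:<port>]  (":443" omitted, it is the HTTPS default)
xsa_app_url() {
  local mode=$1 app=$2 fqdn=$3 port=$4
  case "$mode" in
    ports)
      echo "https://${fqdn}:${port}" ;;
    hostnames)
      if [ -z "$port" ] || [ "$port" = "443" ]; then
        echo "https://${app}.${fqdn}"
      else
        echo "https://${app}.${fqdn}:${port}"
      fi ;;
    *)
      echo "unknown routing mode: $mode" >&2
      return 1 ;;
  esac
}

# Examples:
#   xsa_app_url ports     webide hxe.local.com 53075   # https://hxe.local.com:53075
#   xsa_app_url hostnames webide hxe.local.com 30033   # https://webide.hxe.local.com:30033
#   xsa_app_url hostnames webide hxe.local.com 443     # https://webide.hxe.local.com
```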

Note that while I’m making an HXE install behave more like CF, I’m not duplicating other aspects of CF, such as stubbing out all the services that CF makes available. I’ll save that exercise for another blog post.

Another way to make HXE behave more like CF is to define a trust relationship with an external Identity Provider (IdP) and use it as the source of the application’s users. By default, HXE uses the local HANA DB as the store of application users; in fact, this is how XSA_ADMIN, XSA_DEV, and the other utility users are defined. However, I try to promote the use of an external IdP, and HANA SPS03 now allows you to configure your app’s xsuaa service instance to skip the normal (DB-defined user) login page and seamlessly redirect the user to the external IdP’s login page. Again, this is fodder for another blog post, so I won’t cover it here.

So the main point of this post is to force the HXE installer into hostnames routing mode and, as a bonus, to show you how to use real (or at least your own) certificates so that your browser doesn’t constantly complain about your HXE server.

These instructions assume that you’ll be installing on an x86-based Linux VM with a compatible OS distribution. You can use AWS, Azure, GCP, or your own laptop to provide this, so I won’t go into detail. I do advise using an image or hardware with at least 32GB of RAM. You may be able to get by with 16GB, but if you try, make sure you also have 16GB of swap available, and install things a little at a time (by breaking up the installer script), turning things off as you go.
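If you want to check a machine against this guidance first, here’s a minimal sketch that reads /proc/meminfo. The function name check_hxe_memory is hypothetical, and the thresholds (30GiB of RAM, or 15GiB of RAM plus 15GiB of swap) are my own assumption: they leave some slack because a “32GB” VM usually reports slightly less than 32GiB of MemTotal.

```shell
# Hypothetical preflight check against the RAM/swap guidance above.
# Reads /proc/meminfo by default; pass another file path to test it.
check_hxe_memory() {
  local meminfo=${1:-/proc/meminfo}
  local gib=$((1024 * 1024))   # /proc/meminfo reports kB, so 1 GiB = 1048576 kB
  local mem_kb swap_kb
  mem_kb=$(awk '/^MemTotal:/ {print $2}' "$meminfo")
  swap_kb=$(awk '/^SwapTotal:/ {print $2}' "$meminfo")
  # Assumed slack: the kernel reserves some memory, so a "32GB" VM reports less.
  if [ "$mem_kb" -ge $((30 * gib)) ]; then
    echo "ok"
  elif [ "$mem_kb" -ge $((15 * gib)) ] && [ "$swap_kb" -ge $((15 * gib)) ]; then
    echo "ok-with-swap"   # workable, but install in stages as described above
  else
    echo "insufficient"
    return 1
  fi
}

# Usage:
#   check_hxe_memory
```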

Also, I’m assuming openSUSE with the Leap 42.3 repos. YMMV.

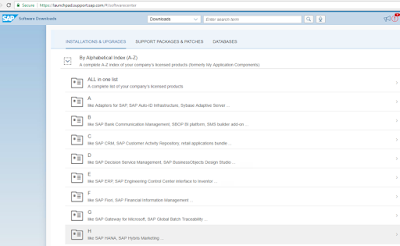

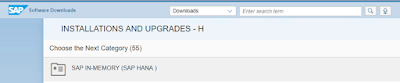

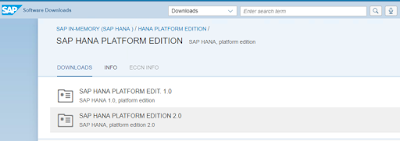

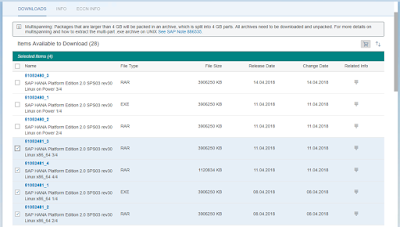

With this in mind, get the binary installer files by signing up for HXE and using the latest downloader.

Note: This post is specific to the HXE installer distributed in April, 2018 with version 2.00.030…

Once you’ve got the tgz files, use SCP to move them to your VM or server.

SSH into your VM as root (or become root) and do some updating before we start installing anything.

Make sure the system libs are up to date.

zypper repos

zypper refresh

zypper update

Run update again to be sure.

zypper update

Loading repository data...

Reading installed packages...

Nothing to do.

Reboot to let the new versions of shared libs take hold.

shutdown -r now

Check that /etc/hosts has a fully qualified domain name (FQDN) entry for this host pointing to its loopback address.

cat /etc/hosts | grep hxe.local.com

127.0.0.1 localhost hxe.local.com
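A slightly stricter version of this check, as a sketch: the hypothetical hosts_has_fqdn function succeeds only if the exact FQDN appears on a non-comment line whose address starts with 127., so a commented-out entry or a longer name such as hxe.local.com.au doesn’t produce a false positive.

```shell
# Hypothetical check: does the hosts file map the exact FQDN to a 127.x.x.x address?
# Comment lines are skipped; the hosts file path can be overridden for testing.
hosts_has_fqdn() {
  local fqdn=$1 hosts=${2:-/etc/hosts}
  awk -v name="$fqdn" '
    /^[[:space:]]*#/ { next }             # ignore commented-out entries
    $1 ~ /^127\./ {                       # loopback address in the first column
      for (i = 2; i <= NF; i++)
        if ($i == name) found = 1         # exact hostname match, not a substring
    }
    END { if (found) exit 0; exit 1 }
  ' "$hosts"
}

# Usage:
#   hosts_has_fqdn hxe.local.com && echo "hosts entry OK" || echo "add the FQDN to /etc/hosts"
```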

The DNS resolution mechanism needs to be able to resolve ANY hostname in your machine’s domain. This can be accomplished in one of two ways. If this server is on the public internet and you control the administration of your domain, you can use your domain provider’s tools to set up both an “A” record and a wildcard “CNAME” record:

hxe IN A 123.45.67.123

*.hxe IN CNAME hxe.local.com

As long as requests made to the server’s external IP address get routed back to its internal address, this will work.

If, however, you are installing within a VM on real hardware, in Docker, or on a server that sits on a NAT-protected subnet, you will need a way to locally override external DNS resolution and provide wildcard DNS matching for any hostname in your machine’s domain.

The easiest tool I’ve found to accomplish this is dnsmasq.

Install dnsmasq using zypper, the SUSE package manager.

zypper in dnsmasq

Edit the dnsmasq config file to add an address entry for your host’s domain name.

vi /etc/dnsmasq.conf

Find the right section by searching for “double-click”, then duplicate the example line, uncomment it, and adjust it (don’t forget the leading “.”):

...

address=/.hxe.local.com/127.0.0.1

...

Save your file (in vi, press Esc, then type :wq).
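If you’d rather not edit the file by hand (or want to script the setup), here’s a sketch of an idempotent alternative. add_dnsmasq_wildcard is a hypothetical helper; it writes an entry of the same form shown above and leaves the file alone if that exact line already exists. You still need to restart dnsmasq afterwards.

```shell
# Hypothetical, idempotent alternative to the manual vi edit: append the wildcard
# address entry to dnsmasq.conf only if that exact line is not already there.
add_dnsmasq_wildcard() {
  local fqdn=$1 ip=${2:-127.0.0.1} conf=${3:-/etc/dnsmasq.conf}
  local line="address=/.${fqdn}/${ip}"          # same form as the entry shown above
  if grep -qxF "$line" "$conf"; then
    echo "already present: $line"
    return 0
  fi
  # Start on a fresh line if the file lacks a trailing newline.
  if [ -s "$conf" ] && [ -n "$(tail -c 1 "$conf")" ]; then
    echo >> "$conf"
  fi
  printf '%s\n' "$line" >> "$conf"
  echo "added: $line"
}

# Usage (as root):
#   add_dnsmasq_wildcard hxe.local.com
```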

Restart dnsmasq to apply the change.

service dnsmasq restart

Verify with dig, pointing it at the local DNS server (dnsmasq):

dig hxe.local.com @127.0.0.1

...

;; ANSWER SECTION:

hxe.local.com. 0 IN A 127.0.0.1

...

Verify other wildcard variations.

dig abc.hxe.local.com @127.0.0.1
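To check several names in one go, here’s a sketch built around dig +short (dig ships in the bind-utils package on openSUSE). check_wildcard_dns is a hypothetical helper; it keeps only the last line of each answer so that a CNAME chain is still compared on its final address.

```shell
# Hypothetical helper: query a DNS server for a few made-up names in the domain
# and confirm that every answer is the expected address.
check_wildcard_dns() {
  local domain=$1 expected=${2:-127.0.0.1} server=${3:-127.0.0.1}
  local name answer rc=0
  for name in "$domain" "abc.$domain" "xyz.$domain" "test-$RANDOM.$domain"; do
    answer=$(dig +short "$name" @"$server" | tail -n 1)   # last line = final A record
    if [ "$answer" = "$expected" ]; then
      echo "OK   $name -> $answer"
    else
      echo "FAIL $name -> ${answer:-<no answer>}"
      rc=1
    fi
  done
  return $rc
}

# Usage:
#   check_wildcard_dns hxe.local.com
```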

Adjust the host’s DNS resolution order to use the local server first before others.

vi /etc/sysconfig/network/config

...

NETCONFIG_DNS_STATIC_SERVERS="127.0.0.1"

...

Trigger a rebuild of the /etc/resolv.conf file.

netconfig update -f
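The same edit can be made non-interactively. This is a sketch with a hypothetical set_static_dns function; it assumes GNU sed (as shipped with openSUSE), keeps a .bak copy of the file, and still needs the netconfig update -f step afterwards.

```shell
# Hypothetical non-interactive version of the vi edit above: set
# NETCONFIG_DNS_STATIC_SERVERS, replacing an existing line or appending one.
set_static_dns() {
  local servers=$1 conf=${2:-/etc/sysconfig/network/config}
  cp "$conf" "$conf.bak"                                   # keep a backup copy
  if grep -q '^NETCONFIG_DNS_STATIC_SERVERS=' "$conf"; then
    sed -i "s|^NETCONFIG_DNS_STATIC_SERVERS=.*|NETCONFIG_DNS_STATIC_SERVERS=\"$servers\"|" "$conf"
  else
    printf 'NETCONFIG_DNS_STATIC_SERVERS="%s"\n' "$servers" >> "$conf"
  fi
}

# Usage (as root):
#   set_static_dns 127.0.0.1 && netconfig update -f
```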

Double-check that the /etc/resolv.conf file was generated properly. The first nameserver line should be 127.0.0.1.

cat /etc/resolv.conf

...

search us-west-1.compute.internal

nameserver 127.0.0.1

nameserver xxx.xx.xx.xxx
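To check this from a script rather than by eye, a one-line awk helper is enough. first_nameserver is a hypothetical name; it simply prints the address on the first nameserver line.

```shell
# Hypothetical helper: print the first nameserver listed in a resolv.conf file.
first_nameserver() {
  awk '$1 == "nameserver" { print $2; exit }' "${1:-/etc/resolv.conf}"
}

# Usage:
#   [ "$(first_nameserver)" = "127.0.0.1" ] && echo "dnsmasq is consulted first"
```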

Test the default DNS search order by not specifying a server with dig.

dig hxe.local.com

...

;; ANSWER SECTION:

hxe.local.com. 0 IN A 127.0.0.1

...

And other variations.

dig abc.hxe.local.com

dig xyz.hxe.local.com

They should all resolve to the same server address as before.

Verify with a ping to be sure the search order is being followed.

ping hxe.local.com

PING localhost (127.0.0.1) 56(84) bytes of data.

64 bytes from localhost (127.0.0.1): icmp_seq=1 ttl=64 time=0.016 ms

64 bytes from localhost (127.0.0.1): icmp_seq=2 ttl=64 time=0.021 ms

64 bytes from localhost (127.0.0.1): icmp_seq=3 ttl=64 time=0.019 ms

^C

ping abc.hxe.local.com

Enable the dnsmasq daemon to start at system startup.

chkconfig dnsmasq on

Created symlink from /etc/systemd/system/multi-user.target.wants/dnsmasq.service to /usr/lib/systemd/system/dnsmasq.service.

systemctl enable dnsmasq.service

systemctl start dnsmasq.service

Verify that it’s set to start at boot.

systemctl list-unit-files | grep dnsmasq

dnsmasq.service enabled

REALLY check that it works after a reboot!

shutdown -r now

After your server comes back up, SSH in as root and ping an arbitrary name in the domain.

ping anything.hxe.local.com

OK, now that we’ve proven that our server can resolve any hostname in our FQDN’s domain, we can proceed to the installation. If you haven’t proven this, DO NOT CONTINUE! Go back and correct the DNS resolution until it works, or the installation WILL fail.
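If you script the rest of the installation, a gate like the following sketch can enforce this rule. dns_preflight is hypothetical; it uses getent ahostsv4, which goes through the system resolver (/etc/nsswitch.conf, /etc/hosts, /etc/resolv.conf) the way most programs do, rather than querying dnsmasq directly as dig @127.0.0.1 does.

```shell
# Hypothetical preflight gate: resolve a few random names in the domain through
# the system resolver and fail if any of them doesn't map to the expected IPv4 address.
dns_preflight() {
  local domain=$1 expected=${2:-127.0.0.1} i name addr
  for i in 1 2 3; do
    name="check-$RANDOM-$i.$domain"
    addr=$(getent ahostsv4 "$name" | awk '{ print $1; exit }')
    if [ "$addr" != "$expected" ]; then
      echo "DNS preflight failed: $name -> ${addr:-unresolved}" >&2
      return 1
    fi
  done
  echo "DNS preflight passed for *.$domain"
}

# Usage, before running any installer step:
#   dns_preflight hxe.local.com || exit 1
```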

Based on where you copied the installation files on your server, you will need to unpack them in a convenient place. I like to use a top-level folder called /install. If you use a different location or name, adjust these commands as needed. I copied the files to the /var/tmp folder on my server, so again, adjust as needed for your situation.

Unpack the HXE SPS03 installer files (create the /install folder first if it doesn’t exist).

mkdir -p /install

cd /install

tar xzvf /var/tmp/hxe.tgz

tar xzvf /var/tmp/hxexsa.tgz

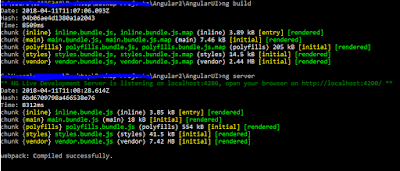

The HXE installer scripts are hard-coded to use ports routing mode. Since we want hostnames routing mode, I created a script that adjusts the stock HXE installer scripts to specify hostnames mode. It also lets you change the names of the org and development space, which by default are created as HANAExpress and development.

Git clone this script repo into the /install folder.

git clone https://github.com/alundesap/hxe_installer_scripts.git

Move the script(s) to the current folder

mv hxe_installer_scripts/* .

Remove the repo folder

rm -rf hxe_installer_scripts/

Run the prep4hostnames.sh script and enter your specifics

./prep4hostnames.sh

Enter fully qualified host name: hxe.local.com

Enter organization name: MyHXE

Enter development space name: DEV

Use the screen command, because the install takes a while and will fail if you lose your connection.

screen

Press Ctrl-A, then d, to detach from screen.

Run screen -R to reattach.

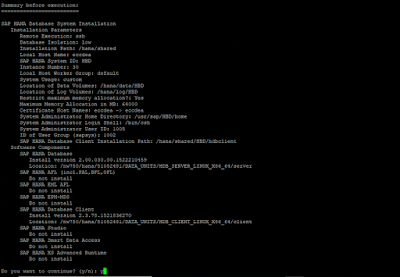

Run the setup script.

./setup_hxe.sh

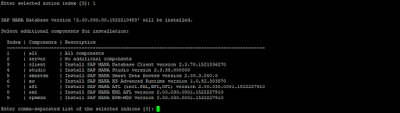

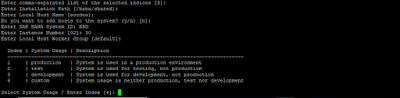

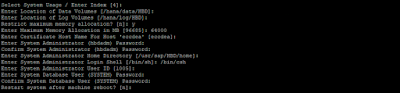

Use the defaults except for the following.

instance number = 00

When prompted with “Enter component to install:”, select server. I’ve found that the installer sometimes fails when all is selected. It’s better to let the server-only installation finish and then let the machine sit for about 15 minutes before re-running the installation script.

local host name = hxe.local.com

password = <secret>

Expect this process to take around 15 minutes. Once it completes, wait about another 15 minutes for the machine to settle before re-running the install script to install XSA (don’t ask me why).

Rerun the install script. Answer the prompts with the same values you did the first time.

./setup_hxe.sh

When you get to this prompt, answer Y.

Detected server instance HXE without Extended Services + apps (XSA)

Do you want to install XSA? (Y/N): Y

The XSA install takes on the order of 90 minutes to complete. This is a good time to walk the dog, get lunch/dinner, etc.

You can monitor what processes are listening on what ports with this command in another shell (as root).

while sleep 5; do clear; date; lsof -n -i -P | grep LISTEN | grep -v 127.0.0.1 | sort --key=9.3,9.7; done

Once you see a process listening on port 30033, XS-A has started up and is installing content.

sapwebdis 1467 hxeadm 12u IPv4 294103 0t0 TCP *:30033(LISTEN)
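Instead of eyeballing the loop, you can block until the port appears. This sketch uses a hypothetical wait_for_port function: lsof’s -iTCP:PORT -sTCP:LISTEN selection, which exits non-zero when nothing matches, does the work, and the two-hour default timeout is an arbitrary assumption. Run it as root so lsof can see hxeadm’s processes.

```shell
# Hypothetical alternative to watching the loop above: wait until some process
# is LISTENing on the given TCP port, or give up after a timeout (seconds).
wait_for_port() {
  local port=$1 timeout=${2:-7200} interval=${3:-15} waited=0
  until lsof -n -P -iTCP:"$port" -sTCP:LISTEN >/dev/null 2>&1; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "timed out waiting for port $port" >&2
      return 1
    fi
    sleep "$interval"
    waited=$((waited + interval))
  done
  echo "port $port is listening"
}

# Usage (as root):
#   wait_for_port 30033
```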

Once you see this line of output, you can open a new console, become hxeadm, log in as XSA_ADMIN, and watch the remaining install of the XS-A components.

[37] XS Controller API available at 'https://api.hxe.local.com:30033'. Creating a connection to this URL using the XS Commandline Client should get you started.

su - hxeadm

xs api https://api.hxe.local.com:30033/ --skip-ssl-validation

xs login -u XSA_ADMIN -p <secret> -o MyHXE -s SAP

If you list all the apps running in the SAP space, you’ll see that they all now use port 30033.

xs apps

This is the default port when using hostnames routing mode. You can change it to the default SSL port, 443, so that you won’t have to specify a port at all. The next set of steps accomplishes this.

Before continuing, check that no other web server process is already using port 443.
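One way to do that check, sketched with ss from iproute2: the hypothetical port_in_use function succeeds if any listening TCP socket’s local address ends in :PORT. It assumes the usual ss -ltn column layout, where the fourth column is Local Address:Port.

```shell
# Hypothetical helper: succeed if any listening TCP socket uses the given port.
# Assumes the usual `ss -ltn` layout, where column 4 is "Local Address:Port".
port_in_use() {
  local port=$1
  ss -ltn | awk 'NR > 1 { print $4 }' | grep -q ":${port}\$"
}

# Usage (as root, so that -p can show the owning process):
#   if port_in_use 443; then ss -ltnp 'sport = :443'; else echo "443 is free"; fi
```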

Be sure that you are running as hxeadm.

The following hdbsql commands each execute a single SQL statement. The first one just shows the contents of the xscontroller.ini configuration. We need to change some settings and then verify that they are correct in the database before continuing.

hdbsql -u SYSTEM -p <secret> -i 00 -d SYSTEMDB "SELECT * FROM M_INIFILE_CONTENTS WHERE FILE_NAME='xscontroller.ini'"

FILE_NAME,LAYER_NAME,TENANT_NAME,HOST,SECTION,KEY,VALUE

...

"xscontroller.ini","SYSTEM","","","communication","api_url","https://api.hxe.local.com:30033"

"xscontroller.ini","SYSTEM","","","communication","default_domain","hxe.local.com"

"xscontroller.ini","SYSTEM","","","communication","internal_https","true"

"xscontroller.ini","SYSTEM","","","communication","router_https","true"

"xscontroller.ini","SYSTEM","","","communication","routing_mode","hostnames"

...

Press "q" to exit

Set the router_port to 443.

hdbsql -u SYSTEM -p <secret> -i 00 -d SYSTEMDB "alter system alter configuration('xscontroller.ini','SYSTEM') SET ('communication','router_port') = '443' with reconfigure"

Set the listen_port to 443.

hdbsql -u SYSTEM -p <secret> -i 00 -d SYSTEMDB "alter system alter configuration('xscontroller.ini','SYSTEM') SET ('communication','listen_port') = '443' with reconfigure"

Set the api_url to include 443.

hdbsql -u SYSTEM -p <secret> -i 00 -d SYSTEMDB "alter system alter configuration('xscontroller.ini','SYSTEM') SET ('communication','api_url') = 'https://api.hxe.local.com:443' with reconfigure"
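If you prefer to apply the three settings in one step, here’s a sketch. xsc_set_sql, xsc_set, and apply_port_443 are hypothetical helpers that simply rebuild the ALTER SYSTEM statements shown above, and SYSTEM_PW is an assumed environment variable holding the SYSTEM password (passing it with -p still exposes it in the process list, just as the commands above do).

```shell
# Hypothetical helpers: rebuild the ALTER SYSTEM statement shown above for any
# [communication] key of xscontroller.ini and run it through hdbsql as hxeadm.
# SYSTEM_PW is an assumed environment variable holding the SYSTEM password.
xsc_set_sql() {
  printf "alter system alter configuration('xscontroller.ini','SYSTEM') SET ('communication','%s') = '%s' with reconfigure" "$1" "$2"
}

xsc_set() {
  hdbsql -u SYSTEM -p "$SYSTEM_PW" -i 00 -d SYSTEMDB "$(xsc_set_sql "$1" "$2")"
}

# Apply router_port, listen_port and api_url in one go, stopping at the first failure.
apply_port_443() {
  local fqdn=$1
  xsc_set router_port 443 &&
    xsc_set listen_port 443 &&
    xsc_set api_url "https://api.${fqdn}:443"
}

# Usage (as hxeadm):
#   SYSTEM_PW='<secret>' apply_port_443 hxe.local.com
```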

Review all the settings to make sure they are correct.

hdbsql -u SYSTEM -p <secret> -i 00 -d SYSTEMDB "SELECT * FROM M_INIFILE_CONTENTS WHERE FILE_NAME='xscontroller.ini'"

FILE_NAME,LAYER_NAME,TENANT_NAME,HOST,SECTION,KEY,VALUE

...

"xscontroller.ini","SYSTEM","","","communication","api_url","https://api.hxe.local.com:443"

"xscontroller.ini","SYSTEM","","","communication","default_domain","hxe.local.com"

"xscontroller.ini","SYSTEM","","","communication","internal_https","true"

"xscontroller.ini","SYSTEM","","","communication","listen_port","443"

"xscontroller.ini","SYSTEM","","","communication","router_https","true"

"xscontroller.ini","SYSTEM","","","communication","router_port","443"

"xscontroller.ini","SYSTEM","","","communication","routing_mode","hostnames"

"xscontroller.ini","SYSTEM","","","communication","single_port","true"

"xscontroller.ini","SYSTEM","","","general","embedded_execution_agent","false"

"xscontroller.ini","SYSTEM","","","persistence","hana_blobstore","true"

Press "q" to exit
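Rather than scanning the listing by eye, you can extract individual values. This sketch assumes the quoted, comma-separated layout shown above; ini_value is a hypothetical helper that prints the VALUE for a given KEY (and nothing if the key isn’t set).

```shell
# Hypothetical helper: print the VALUE column for a given KEY from the CSV-style
# hdbsql output shown above ("FILE","LAYER","TENANT","HOST","SECTION","KEY","VALUE").
ini_value() {
  local key=$1
  # Splitting on "," (quote, comma, quote) leaves stray quotes on the first and
  # last fields; only the last one (VALUE) is used, so strip its trailing quote.
  awk -F'","' -v k="$key" '$6 == k { v = $7; sub(/"$/, "", v); print v }'
}

# Usage: pipe the same SELECT into it, e.g.
#   hdbsql -u SYSTEM -p <secret> -i 00 -d SYSTEMDB \
#     "SELECT * FROM M_INIFILE_CONTENTS WHERE FILE_NAME='xscontroller.ini'" | ini_value router_port
```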

Stop the HANA processes. The HDB script lives in the instance directory, so change there first.

cd /usr/sap/HXE/HDB00

./HDB stop

Drop out of hxeadm back to root user.

exit

Now, as root, complete these steps. Here we replace the icmbnd executable with a copy that can bind to a privileged port. Binding to a port below 1024 requires root permission, and 443 is such a port, so the copied icmbnd is owned by root and made setuid.

cd /hana/shared/HXE/xs/router/webdispatcher

cp icmbnd.new icmbnd

chown root:sapsys icmbnd

chmod 4750 icmbnd

ls -al icmbnd

The output should show the icmbnd file with these permissions:

-rwsr-x--- 1 root sapsys 2066240 May 4 04:03 icmbnd
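To confirm the result without reading the ls output closely, GNU stat can print the octal mode and owner directly. check_file_mode is a hypothetical helper; 4750 is the setuid bit (4) plus rwxr-x--- (750), matching the chmod above.

```shell
# Hypothetical check of a file's octal mode and owner:group (GNU stat format).
# For icmbnd after the steps above, the expected value is "4750 root:sapsys".
check_file_mode() {
  local file=$1 expected=$2 actual
  actual=$(stat -c '%a %U:%G' "$file") || return 1
  if [ "$actual" = "$expected" ]; then
    echo "OK: $file is $actual"
  else
    echo "MISMATCH: $file is $actual, expected $expected" >&2
    return 1
  fi
}

# Usage (as root):
#   check_file_mode /hana/shared/HXE/xs/router/webdispatcher/icmbnd "4750 root:sapsys"
```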

Completely reboot the server so that all the processes change from 30033 to 443.

shutdown -r now

After reboot verify that things are now correct.

If you want to watch server processes and the ports they are binding to, use this command loop as root. Ctrl-C to break the loop.

while sleep 5; do clear; date; lsof -n -i -P | grep LISTEN | grep -v 127.0.0.1 | sort --key=9.3,9.7; done

You should notice that there is no longer a process binding to port 30033, but now one on 443.

sapwebdis 4764 hxeadm 14u IPv4 56676 0t0 TCP *:443 (LISTEN)

Break the above loop with Ctrl-C.

Now check how things look with the xs command. First, become the hxeadm user.

su - hxeadm

Reset the xs API endpoint, since the xs client still thinks it needs to use port 30033.

xs api https://api.hxe.local.com:443/ --cacert=/hana/shared/HXE/xs/controller_data/controller/ssl-pub/router/default.root.crt.pem

Re-login as XSA_ADMIN.

xs login -u XSA_ADMIN -p <secret> -o MyHXE -s SAP

You should now see that none of the apps running in the SAP space show port 30033; their URLs have no port designation at all. This is because 443 is the default SSL port.

Here is the line for the WebIDE app.

webide STARTED 1/1 512 MB <unlimited> https://webide.hxe.local.com

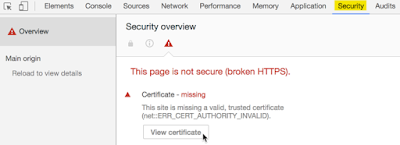

If you browse to one of the app URLs, such as the WebIDE, you will be greeted with the dreaded invalid certificate authority warning. This is because the installer generated a self-signed certificate that isn’t issued by any of the browser’s built-in certificate authorities.

https://webide.hxe.local.com/

Even if you create an exception for this certificate in your browser, you’ll find that a few things just don’t work properly. It’s best to use a certificate that your local system really trusts. This can be accomplished in one of two ways.

The easiest, but also the most inconvenient and costly, way is to go to an SSL certificate provider and purchase a wildcard SSL certificate for your server’s fully qualified domain name. In the US, I use GoDaddy, and a quick check of their website shows that a certificate like this will cost me at least $300 per year.

The biggest advantage of this approach is that ANY browser will already have GoDaddy’s certificate authority certs loaded and trusted, so the purchased cert will be immediately recognized as secure. For production servers, you will have to do this anyway.

The disadvantages are costs, proving you are the rightful owner of the domain name, time it takes to purchase and install, and the fact that it will expire and you’ll have to renew and re-install an updated one in time.

Another way to go is to proclaim yourself as a valid and trusted certificate authority in your own right! That way, you can generate your own certificates as you need them and you can set them to expire a long time from now and it doesn’t cost you anything more than your time and effort.

The downside is that nobody trusts you. However, we can force trust manually by installing and trusting your certificate authority (CA) certificate and your intermediate certificate on each client. Since you’re likely to use only a handful of client machines for development and testing (if this server is intended for production use, you’ll have to pay for a real certificate), you will only need to trust your CA on a few machines. And since it’s you doing it, you trust yourself not to be malicious, don’t you?

I’ve created a set of scripts that you’ll need to run as root to accomplish this. I won’t get into the details of what they do; if you’re interested, just inspect the scripts themselves. Props to Jamie Nguyen for his excellent guide, OpenSSL Certificate Authority, which inspired these scripts.

Shell into your server and be sure to be the root user.

The scripts create a folder inside /root, so change into /root first.

cd /root

Now git clone this repo.

git clone https://alunde@bitbucket.org/alunde/ca.git

This will create a folder inside /root called ca. Change into that folder.

cd ca

We need to create the top-level certificate authority certificate first. When you are prompted to create a passphrase, use something non-trivial and make a note of it in a secure place.

./1_gen-root-key-cert

You’ll be prompted for information about your company, location, etc. Just fill in reasonable values that you’ll recognize when you inspect the certificate.

You use this certificate to “sign” an intermediate certificate, so let’s create that one with the next script. Again, be careful when you’re asked to provide the passphrase for the CA certificate you just created and when you’re asked to create a passphrase for the new intermediate certificate. I like to use the same passphrase for both, but this is up to you. As before, keep this passphrase super secret.

./2_gen-intermediate-key-cert

Again you’ll be prompted for info about your company, etc. Enter values as before.

Now change into the intermediate folder for the real work of creating the certificate that we’ll use for our server.

cd intermediate

Now run the script to generate the SSL certificate for the server.

./gen-xsa-ca-ssl-cert

You’ll be prompted to enter your server’s fully qualified host name.

Enter fully qualified host name: hxe.local.com

The script then reminds you, when prompted for the Common Name, to add an asterisk and a dot (*.) to the front of your server’s host name. This is very important; if you get it wrong, the certificate won’t be generated properly.

When prompted for Common Name []: use this *.hxe.local.com

You are also reminded to use the passphrase you created when you created the intermediate certificate in step #2 above.

Enter “Y” to continue.

----

Country Name (2 letter code) [US]:

State or Province Name [State]:

Locality Name []:

Organization Name [My Corp, Inc.]:

Organizational Unit Name []:

Common Name []:*.hxe.local.com

Email Address [info@local.com]:

You’ll see a lot of output describing the steps the script takes, followed by an inspection of the results so that you can verify the certificate was created properly. The resulting certificate is stored in the certs subfolder, and its key file is stored in the private subfolder. The script also hints at the steps needed to install the certificate into the XSA system. Since we have already moved our server from port 30033 to port 443, you’ll need to adjust the command slightly.

cd /root/ca/intermediate/

/hana/shared/HXE/xs/bin/xs api https://api.hxe.local.com:443 --skip-ssl-validation

/hana/shared/HXE/xs/bin/xs login -u XSA_ADMIN -p <secret> -s SAP

/hana/shared/HXE/xs/bin/xs set-certificate hxe.local.com -k private/hxe.local.com.key -c certs/hxe.local.com.pem
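If set-certificate complains, or just to be safe, a few standard openssl checks can confirm that the files belong together. This is a sketch with hypothetical function names. The file names are assumptions (the command above uses certs/hxe.local.com.pem while the XS classic steps below use hxe.local.com.cert.pem), so adjust them to whatever the script actually wrote into certs/ and private/. The key comparison assumes an RSA key.

```shell
# Hypothetical sanity checks for the server certificate, key, and CA chain.

# 1. Does the certificate belong to this private key? (RSA keys: compare moduli)
cert_matches_key() {
  local cert=$1 key=$2
  [ "$(openssl x509 -noout -modulus -in "$cert" | openssl sha256)" = \
    "$(openssl rsa -noout -modulus -in "$key" | openssl sha256)" ]
}

# 2. Does the certificate chain up to our own CA?
cert_chain_ok() {
  local chain=$1 cert=$2
  openssl verify -CAfile "$chain" "$cert" | grep -q ': OK$'
}

# 3. Which subject (Common Name) was the certificate issued for?
cert_subject() {
  openssl x509 -noout -subject -nameopt RFC2253 -in "$1"
}

# Usage (as root, in /root/ca/intermediate):
#   cert_matches_key certs/hxe.local.com.cert.pem private/hxe.local.com.key && echo "key matches"
#   cert_chain_ok certs/ca-chain.cert.pem certs/hxe.local.com.cert.pem && echo "chain OK"
#   cert_subject certs/hxe.local.com.cert.pem
```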

We need to restart the HANA system for the new certificate to take hold.

While we’re waiting, we can also replace the self-signed certificate that the XS classic web dispatcher uses as well. This isn’t critical unless you’re using the older XS scripting engine.

Here are the steps I use to accomplish this.

As the root user, back up the existing SAPSSLS.pse and copy the certificates into the sec folder.

cd /hana/shared/HXE/HDB00/hxe.local.com/sec

cp SAPSSLS.pse SAPSSLS_pse.bak

cp /root/ca/intermediate/certs/ca-chain.cert.pem .

cp /root/ca/intermediate/certs/hxe.local.com.cert.pem .

cp /root/ca/intermediate/private/hxe.local.com.key .

Now become the hxeadm user and generate the new pse file. Make sure to enter a blank passphrase.

su - hxeadm

cd /hana/shared/HXE/HDB00/hxe.local.com/sec

sapgenpse import_p8 -p hana2sp03.pse -r ca-chain.cert.pem -c hxe.local.com.cert.pem hxe.local.com.key

Copy the newly generated PSE over the one the system uses, then restart HANA.

cp hana2sp03.pse SAPSSLS.pse

cd /usr/sap/HXE/HDB00

./HDB stop ; ./HDB start

When the HDB start command finishes, remember that most of the XSA applications are started in the background, so it can take some time before they are all fully functional. If you start interacting with the system before then, you will likely see issues, so it’s best to wait.

How long, you might ask? Well, you can watch things to be sure. First, as root, watch for port 443 to be bound to a process. We did this above, but here is the command again. Press Ctrl-C to break out of the loop.

while sleep 5; do clear; date; lsof -n -i -P | grep LISTEN | grep -v 127.0.0.1 | sort --key=9.3,9.7; done

Once you see that, become the hxeadm user and set the api to use the new certificate and port 443 and then login and check the state of the apps running in the SAP space.

su - hxeadm

xs api https://api.hxe.local.com:443/ --cacert /hana/shared/HXE/xs/controller_data/controller/ssl-pub/router/default.root.crt.pem

xs login -u XSA_ADMIN -p <secret> -s SAP

Now check the running apps.

xs apps

The second column shows either STARTED or STOPPED for each app. This is misleading until you notice that the column header is “requested state”, not “actual state”. The apps that have actually started completely are those where the number of instances running equals the number of instances requested, so initially you’ll see a lot of 0/1s in the third column. Keep running the xs apps command until the STARTED apps show 1/1. Again, this will take some time, so keep checking back.

webide STARTED 1/1 512 MB <unlimited> https://webide.hxe.local.com
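Rather than re-running xs apps by hand, you could poll it. This sketch assumes the column layout shown above (name, requested state, instances, and so on); apps_not_ready and wait_for_xsa_apps are hypothetical helpers, and you need to be logged in with xs as hxeadm first.

```shell
# Hypothetical helpers around `xs apps` (run as hxeadm after `xs login`).
# Assumed columns: name, requested state, instances (running/requested), memory, ...

# Print the names of apps requested as STARTED whose instances are not all running.
apps_not_ready() {
  awk '$2 == "STARTED" && $3 ~ /^[0-9]+\/[0-9]+$/ {
         split($3, n, "/")
         if (n[1] != n[2]) print $1
       }'
}

# Poll until every STARTED app reports n/n instances (interval in seconds).
wait_for_xsa_apps() {
  local interval=${1:-60} out pending
  while :; do
    out=$(xs apps) || { echo "xs apps failed (are you logged in?)" >&2; return 1; }
    pending=$(printf '%s\n' "$out" | apps_not_ready)
    [ -z "$pending" ] && break
    echo "$(date '+%H:%M:%S') still starting: $(printf '%s' "$pending" | tr '\n' ' ')"
    sleep "$interval"
  done
  echo "all STARTED apps report all instances running"
}

# Usage:
#   wait_for_xsa_apps 60
```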

While we are waiting, we can check the info screen of the api endpoint in our browser. Browse to this URL.

https://api.hxe.local.com/v2/info

But wait a second you say! My browser is still showing that it’s not secure!

If you’re using Chrome, open the Developer Tools window and select the Security tab, where you can inspect the details of the certificate.

These (blurred) values should match what you entered when creating the certificate authority certificate above.

But it’s still not trusted…

That’s because we haven’t yet told your system to trust certificates created by our own CA. To do this, we must first copy the CA certificate and the intermediate certificate to our local system.

Copy the ca.cert.pem and intermediate.cert.pem files from your server to your local system. I’ll use scp, but if that isn’t an option, you can always dump the contents of the PEM files with the cat command and paste them into text files on your local system.

scp hxe.local.com:/root/ca/certs/ca.cert.pem .

scp hxe.local.com:/root/ca/intermediate/certs/intermediate.cert.pem .

Now that you have local copies, import them into your local system’s trust store. This varies by system: on Mac, use the Keychain Access application and set the CA cert to “Always Trust”; on Windows, use the Internet Options system tool (Content tab, Certificates button) to import them into Trusted Root Certification Authorities and Intermediate Certification Authorities, respectively. You’ll need local admin rights to do this, and if IT has locked down your system, you’ll have to get them to do it for you, or you’re just plain out of luck.
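For Mac and Linux clients, the command-line equivalent can be sketched as follows. trust_ca_cmd is hypothetical and only prints the command for you to review; the macOS security add-trusted-cert call and the Linux anchor directories are the standard ones as far as I know, but check them against your OS. The Debian/Ubuntu file name hxe-root-ca.crt is an arbitrary choice. It covers the root CA only, and some browsers (Firefox in particular) keep their own certificate store regardless.

```shell
# Hypothetical helper: print (not run) a command that adds the root CA certificate
# to the client's system trust store, so you can review it before using sudo.
# Covers macOS and common Linux layouts only; Windows users follow the steps above.
trust_ca_cmd() {
  local ca=$1 os=${2:-$(uname -s)}
  case "$os" in
    Darwin)
      echo "sudo security add-trusted-cert -d -r trustRoot -k /Library/Keychains/System.keychain $ca" ;;
    Linux)
      if [ -d /etc/pki/trust/anchors ]; then                # openSUSE / SLES
        echo "sudo cp $ca /etc/pki/trust/anchors/ && sudo update-ca-certificates"
      elif [ -d /etc/pki/ca-trust/source/anchors ]; then    # RHEL / Fedora
        echo "sudo cp $ca /etc/pki/ca-trust/source/anchors/ && sudo update-ca-trust"
      else                                                  # Debian / Ubuntu expect a .crt name
        echo "sudo cp $ca /usr/local/share/ca-certificates/hxe-root-ca.crt && sudo update-ca-certificates"
      fi ;;
    *)
      echo "no command known for $os; import $ca manually" >&2
      return 1 ;;
  esac
}

# Usage:
#   trust_ca_cmd ca.cert.pem
```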

Once this is done, it’s time to test. Load the API URL as before and check that the location bar now shows a happy green lock icon. If it doesn’t, you may have to quit and restart your browser, as some browsers hang onto certificates for a while even when a new one is available.
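To rule out browser caching entirely, you can also test from the command line. This sketch assumes your client resolves api.hxe.local.com to the server and that you bundle the two PEM files you copied down; check_https is a hypothetical helper around curl --cacert.

```shell
# Hypothetical check: can curl verify the server's certificate against your own CA?
# The bundle holds the root and intermediate certificates you copied down.
check_https() {
  local url=$1 bundle=$2
  if curl -sS -o /dev/null --cacert "$bundle" "$url"; then
    echo "trusted: $url"
  else
    echo "NOT trusted (or unreachable): $url" >&2
    return 1
  fi
}

# Usage (on the client, in the folder holding the copied PEM files):
#   cat ca.cert.pem intermediate.cert.pem > hxe-bundle.pem
#   check_https https://api.hxe.local.com/v2/info hxe-bundle.pem
```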

You should now be able to get to the WebIDE without any issues of the browser complaining (assuming it’s had enough time to get started by now).

Note: Remember to login with the XSA_DEV user not the XSA_ADMIN user when accessing the WebIDE as the XSA_DEV user has the proper role collections assigned to it.

https://webide.hxe.local.com

Let’s also confirm that the XS classic web dispatcher has its certificate set correctly. Browse to this URL and check that it also shows a happy green lock icon in the browser’s location bar.

https://hxe.local.com:4300/

You should see this screen.