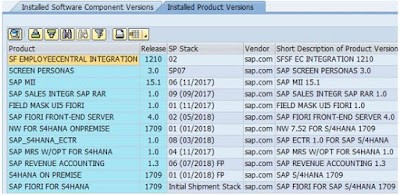

The SAP HANA intelligent data platform has been available for eight years, and with each passing year, we continue to see more and more innovation. You can expect the same in the next few weeks with the release of HANA 2.0 SPS04. However, I wanted to take an opportunity to step back and have a look at some “basics” of SAP HANA – what made it different back in 2011 and how this difference continues to add value in 2019 and beyond. This is part one of a two-part series, with another blog planned for next week. For now, I’ll focus on the in-memory and columnar structure of SAP HANA as well as the value it enables via a virtual data model.

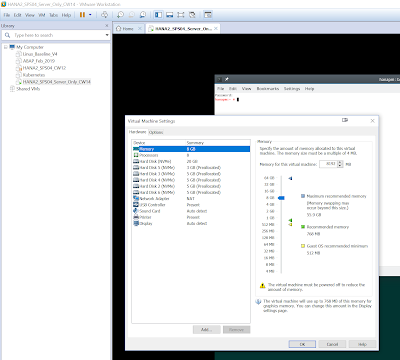

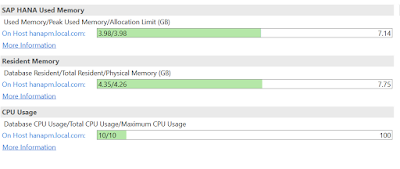


In-Memory and Columnar

I do not intend to get into the technical detail and internals of SAP HANA, as there is plenty of help documentation for that. It’s worth mentioning again that SAP HANA pioneered the concept of having a single copy of data in an in-memory and columnar structure and continues to be the market leader in this space. The “single copy” part of my statement is what truly differentiates SAP HANA: we don’t have to transact in a row-based structure and then re-persist the same data in a column-based structure, which creates redundancy and latency. With the technology advancements in SAP HANA, we’re able to transact directly on the in-memory columnar structure and immediately reap the benefits. This enables optimal performance for both transactional and analytic workloads. Even if we don’t transact directly on SAP HANA, it can handle the load of transactional replication. This means that we can do real-time replication from other databases into this in-memory columnar structure without having to batch up the data with an ETL tool as in the past (though we can do that too if you’d like, perhaps because the source can’t handle the load). Ultimately, the in-memory columnar technology is a key enabler for application advancements like SAP S/4HANA and SAP BW/4HANA, but it also has merit outside those applications, which is what I will discuss next.
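
To make the “single copy” idea a bit more concrete, here is a minimal Python sketch. It is only an illustration: plain lists stand in for storage, the table and field names are made up, and it does not model SAP HANA’s actual internals. It shows the same records laid out by row and by column, an aggregate that only needs to scan one column, and a transactional insert that writes straight into the columnar copy.

```python
# Illustrative only: plain Python lists stand in for storage layouts.
# All names (order_id, region, amount) are hypothetical.
rows = [
    {"order_id": 1, "region": "EMEA", "amount": 120.0},
    {"order_id": 2, "region": "APJ", "amount": 80.0},
    {"order_id": 3, "region": "EMEA", "amount": 50.0},
]

# Columnar layout: one list per column, holding the same data.
columns = {name: [row[name] for row in rows] for name in rows[0]}


def total_row_store(records):
    """Visit every record to pick out a single field."""
    return sum(record["amount"] for record in records)


def total_column_store(cols):
    """Scan only the 'amount' column; other columns are never read."""
    return sum(cols["amount"])


def insert(cols, record):
    """A transactional write appends to each column of the one copy."""
    for name, value in record.items():
        cols[name].append(value)


row_total = total_row_store(rows)
col_total = total_column_store(columns)

# Write directly to the columnar copy, then aggregate again.
insert(columns, {"order_id": 4, "region": "AMER", "amount": 30.0})
new_total = total_column_store(columns)

print(row_total, col_total, new_total)
```

The point of the sketch is that there is no second, analytics-only copy to refresh: the insert and the aggregate work on the same structure, so the new order shows up in the total right away.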

Virtual Data Model

In the first 6-9 months of SAP HANA being generally available, and before BW on HANA came along, there really was just one use case: SAP HANA as a data mart solution. Sure, we gave it some other names like “side-car,” agile data mart, operational reporting, and application accelerator, but in the end all of these rely on loading data into the platform, modeling the data into a nice structure for analytics, and then connecting either an application or a BI tool to read the data. That sounds easy enough in concept, but what was different was how we were able to accomplish this with SAP HANA. To explain that part, I’m going to try to use the same analogy I’ve been using since 2012…

This may miss the mark with some of my millennial and Gen Z friends, but growing up in the 80s and 90s (Xennials rule!) I was no stranger to the “mix tape.” If I wanted to make a compilation of ‘Love Songs’ for my girlfriend or ‘Raver’s Beats’ for my buddies, it was a big effort. The steps were something like the following:

| # | Mix Tape |
| --- | --- |
| 1 | Identify the theme |
| 2 | Determine songs and order – this also involved a calculation of the cassette duration and what could fit |
| 3 | Get the songs – usually recorded onto a raw tape from the radio by lining up the microphone of my boombox with the speakers of my brother’s boombox (and yes, we called the radio station to request the songs) |
| 4 | Copy the songs from the raw tape onto the mix tape in the proper order – being careful to maintain time between songs, etc. |
| 5 | Rework over and over until I like the result |
| 6 | Hand write the insert for the cassette – with “correction fluid” to help me out based on #4 (As a side note, did you know Wite-Out [or Tipp-Ex for my European friends] and Liquid Paper are still selling well?) |
| 7 | Deliver the finished product |

This was a true labor of love, and in some ways I miss it. Over time it got a little easier with the introduction of dual cassette decks, the CD, the MP3, and Napster (I admit no wrongdoing)…but the game totally changed in 2001 when Apple introduced iTunes and the iPod. Ever since then, I can easily buy my music online and simply drag and drop it into a playlist that automagically syncs to my device. Importantly for my analogy, the digital playlist isn’t making physical copies of the songs but rather simply stores pointers to the one copy in the library or on the device. This allows an easy adjustment at the click of a button if I decide my taste has changed. I may not be making playlists for the same themes as my old mix tapes, but it’s sure easy to maintain my ‘Running Music’ and ‘Kids Songs’ these days.

While that was a fun little diversion to the 80s/90s for me, there must be a reason I brought it up in the context of SAP HANA. In my mind, the 2011 introduction of SAP HANA to the world of business data was similar to the introduction of iTunes to the consumer music world in 2001. Think about it for a minute from a data and business analytics perspective. I can easily draw a comparison between the steps for my “mix tape” and the high-level steps involved in a business analytics project:

| # | Mix Tape | | Business Analytics |
| --- | --- | --- | --- |
| 1 | Identify the theme | > | Identify the business domain/process |
| 2 | Determine songs and order | > | Determine the necessary data sets (in many cases specific fields) |
| 3 | Get the songs | > | Get the data (often an arduous effort of batch extraction) |
| 4 | Copy the songs from the raw tape onto the mix tape in the proper order | > | Restructure the data for consumption (layers of persisted transformations) |
| 5 | Rework over and over until I like the result | > | Rework (the business needs will inevitably evolve as the output is better understood) |
| 6 | Hand write the insert for the cassette | > | Document the solution |
| 7 | Deliver the finished product | > | Deliver the finished product |

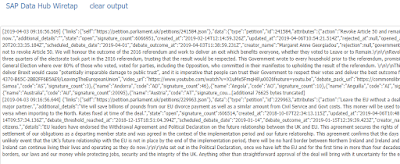

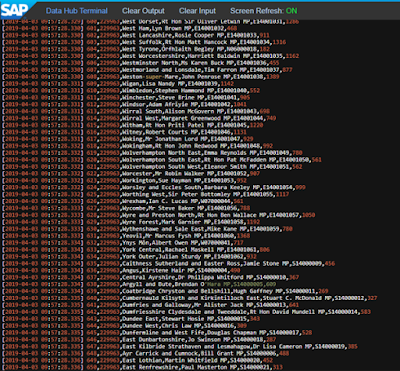

The difference with SAP HANA comes in steps 3-5 with the introduction of the virtual data model. Everyone who has worked on a data mart/warehouse project knows that the vast majority of the effort encapsulated in the above steps comes in trying to prepare the data to answer business requirements. With SAP HANA, we changed the game as follows:

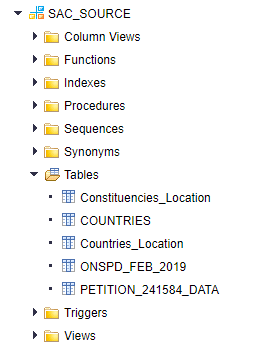

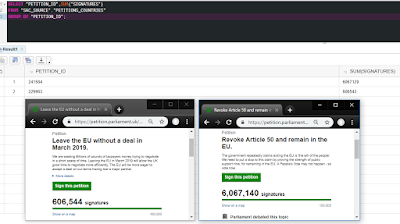

◈ Get the data – Simplify the process and do straight 1:1 replication from source to target, applying logic like filter conditions or excluding fields only if a specific requirement (e.g., security) dictates the need to do so. I recommend to customers that they establish a principle that data gets stored “exactly once in its most raw format” in SAP HANA.

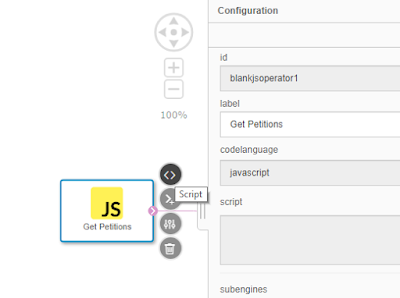

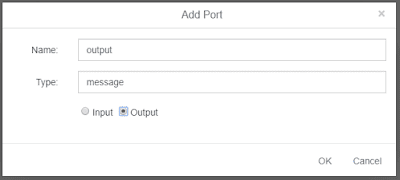

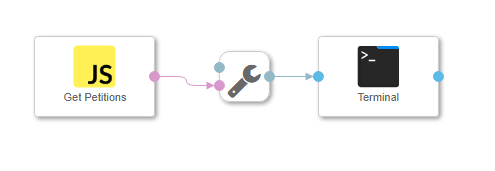

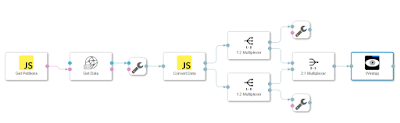

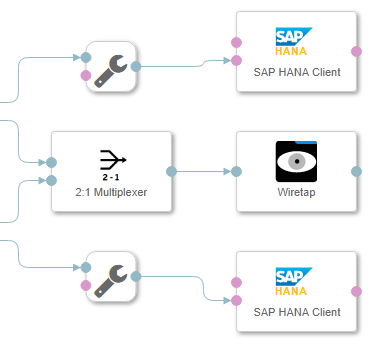

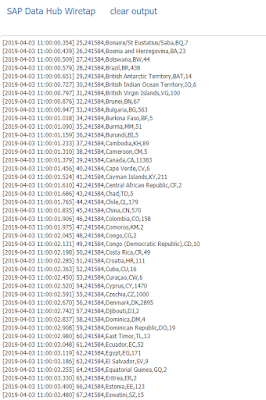

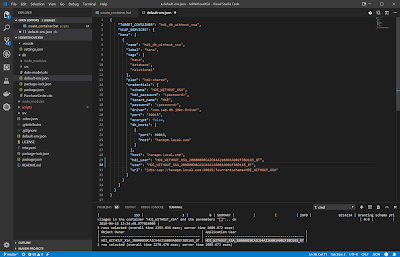

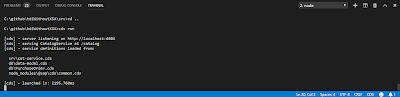

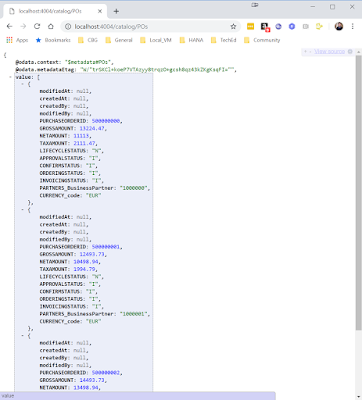

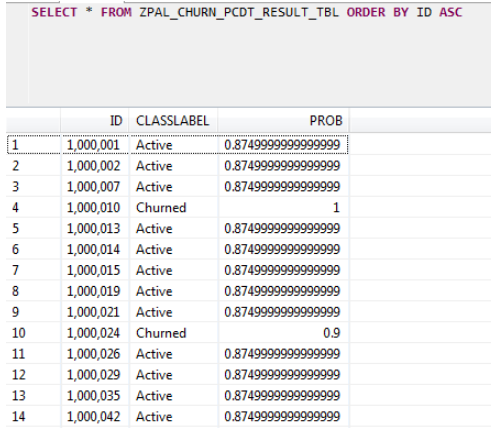

◈ Restructure the data for consumption – This is the not-so-secret sauce of SAP HANA; because of the previously mentioned technology, we can now build a completely virtual data model (aka playlist). The graphical modeler in SAP HANA allows us to build an information model (special types of views) that integrates all of the raw data into a virtual structure that is ready for consumption. This means any aggregations, calculations, transformations, joins, etc. (including the often-requested currency conversion, unit of measure conversion, and hierarchies) all happen dynamically at the time of access. In-memory columnar enables this on-the-fly processing, and the result is that we have removed latency and redundancy from the process – the data is ready for consumption the moment it lands in its raw format. [Note: I recognize that there is still the possibility of extremely costly processing that needs to be persisted, and of course we need to manage those cases…but only on an exception basis!]

◈ Rework – While I love the above bullet, this is the one that gets me the most excited about the virtual data model. A former manager of mine used to talk about how you could “fail fast” with SAP HANA. Asking you to fail sounds counterintuitive, but the reality is that business users rarely know exactly what they need on the first try. With the virtual data model, we can quickly get a model in front of the user, recognize the gaps (failures), and tease out the real business needs. This agility comes from the on-the-fly nature of the virtual data model and from not having to wait for overnight batch ETL jobs to complete. In addition, because we’ve brought over all fields from the source, it isn’t an issue when a new data element is requested – a very different experience from the past, when we might have also had to change our extraction jobs. The result of these changes is that it becomes much more realistic for developers and business users to sit together and work through challenges in an agile manner.
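
The three bullets above can be sketched in a few lines of Python. This is a rough analogy rather than SAP HANA itself: it uses an in-memory SQLite database as a stand-in, a plain SQL view in place of a graphical calculation view, and every table, column, and rate value is hypothetical. It shows raw tables loaded 1:1, a view that does the joins, currency conversion, and aggregation at query time, a new raw row becoming visible immediately, and a “rework” that adds a field simply by redefining the view.

```python
import sqlite3

# Stand-in for the target platform; SQLite is an assumption, not SAP HANA.
conn = sqlite3.connect(":memory:")

# "Get the data": raw tables replicated 1:1, no transformation applied.
conn.executescript("""
    CREATE TABLE raw_orders (order_id INTEGER, customer_id INTEGER,
                             amount REAL, currency TEXT);
    CREATE TABLE raw_customers (customer_id INTEGER, name TEXT, country TEXT);
    CREATE TABLE raw_rates (currency TEXT, rate_to_usd REAL);
    INSERT INTO raw_orders VALUES
        (1, 10, 100.0, 'EUR'), (2, 11, 200.0, 'USD'), (3, 10, 50.0, 'EUR');
    INSERT INTO raw_customers VALUES (10, 'Acme', 'DE'), (11, 'Globex', 'US');
    INSERT INTO raw_rates VALUES ('EUR', 1.25), ('USD', 1.0);
""")

# "Restructure": a virtual model. Nothing is copied or persisted here;
# the join, conversion, and aggregation run when the view is queried.
conn.execute("""
    CREATE VIEW sales_by_customer AS
    SELECT c.name AS customer,
           SUM(o.amount * r.rate_to_usd) AS revenue_usd
    FROM raw_orders o
    JOIN raw_customers c ON c.customer_id = o.customer_id
    JOIN raw_rates r ON r.currency = o.currency
    GROUP BY c.name
""")


def read_model():
    """Query the virtual model the way a BI tool would."""
    return conn.execute(
        "SELECT * FROM sales_by_customer ORDER BY customer"
    ).fetchall()


first = read_model()

# A new raw row is visible through the view without any batch job.
conn.execute("INSERT INTO raw_orders VALUES (4, 11, 40.0, 'USD')")
after_insert = read_model()

# "Rework": the user now wants country too. The field is already in the
# raw data, so only the view definition changes; no reload is needed.
conn.executescript("""
    DROP VIEW sales_by_customer;
    CREATE VIEW sales_by_customer AS
    SELECT c.country AS country, c.name AS customer,
           SUM(o.amount * r.rate_to_usd) AS revenue_usd
    FROM raw_orders o
    JOIN raw_customers c ON c.customer_id = o.customer_id
    JOIN raw_rates r ON r.currency = o.currency
    GROUP BY c.country, c.name;
""")
after_rework = read_model()

print(first, after_insert, after_rework, sep="\n")
```

Note that the rework step never touches the raw tables or the load process, which is the “playlist” behavior from the analogy: the model is just a set of pointers into the one copy of the data.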

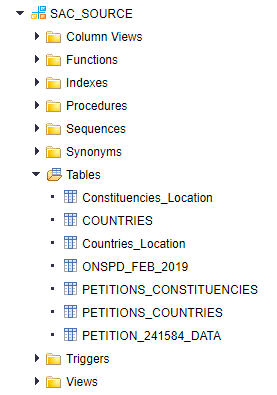

An example of a virtual data model built as a graphical calculation view in SAP HANA. In this relatively simple case, we have an integrated view of four tables that are modeled together to provide a single semantic layer for consumption.

I mentioned that this type of data mart was essentially the only use case in the first 6-9 months of general availability for SAP HANA. It is also a key part of the foundation for what differentiates applications on SAP HANA (consider the new data model and embedded analytics in SAP S/4HANA) – including the architecture of a full-blown data warehouse. Today, this remains one of the most common use cases for SAP HANA with thousands of customers using a virtual data model productively to provide real-time information to their business users. Use cases run the gamut in both type of data and purpose, but the message is clear that the SAP HANA approach with the virtual data model is a differentiated method of preparing business data for consumption.