I’m assuming you have a working Ubuntu 16.04 Server or Desktop installation and the HANA Express installation files at hand.

What we’ll do is:

What we’ll get is:

$ sudo apt-get install lxc lxc-templates wget bridge-utils

Before we create the network bridge we have to gather few information about our System.

We have to identify the nameserver, get information on the default gateway and at least make us familiar with our primary network interface.

$ sudo cat /etc/resolv.conf

# Dynamic resolv.conf(5) file for glibc resolver(3) generated by resolvconf(8)

# DO NOT EDIT THIS FILE BY HAND -- YOUR CHANGES WILL BE OVERWRITTEN

nameserver 192.168.1.1

The nameserver on my system is the machine with the IP address 192.168.1.1.

$ sudo route

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

default 192.168.1.1 0.0.0.0 UG 0 0 0 enp2s0

192.168.1.0 * 255.255.255.0 U 0 0 0 enp2s0

The default gateway on my system is the machine with the IP address 192.168.1.1.

$ sudo ifconfig

enp2s0 Link encap:Ethernet HWaddr d8:50:e6:51:af:aa

inet addr:192.168.1.236 Bcast:192.168.1.255 Mask:255.255.255.0

inet6 addr: fe80::216:3eff:fe4c:1d79/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:49 errors:0 dropped:0 overruns:0 frame:0

TX packets:13 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:12962 (12.9 KB) TX bytes:1478 (1.4 KB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

My primary network interface is enp2s0 with the IP address 192.168.1.236 and a subnet mask of 255.255.255.0. Now let’s create a network bridge by editing the file /etc/network/interfaces.

$ sudo nano /etc/network/interfaces

# This file describes the network interfaces available on your system

# and how to activate them. For more information, see interfaces(5).

source /etc/network/interfaces.d/*

# The loopback network interface

auto lo

iface lo inet loopback

# The primary network interface

auto enp2s0

iface enp2s0 inet manual

# bridge for VMs

auto brlan

iface brlan inet static

address 192.168.1.10

netmask 255.255.255.0

gateway 192.168.1.1

bridge_ports enp2s0

bridge_stp off

bridge_fd 9

dns-nameservers 192.168.1.1

In the file /etc/network/interfaces we have changed the settings for the primary network interface to ‘manual’, which means bring up the interface and do nothing else. We’ve created a new ‘virtual’ interface named ‘brlan’ which is our network bridge. The settings address and netmask(subnet mask) have been show in the output ‘sudo ifconfig’ we did earlier. Settings for gateway was given to us by the output of ‘sudo route’. And finally the dns-nameservers attribute as obtained by the command ‘sudo cat /etc/resolv.conf’.

$ sudo service networking restart

If your on ssh you will loose the connection to the machine in that step, reconnect using the new IP address 192.168.1.10. We should check the result first.

$ sudo ifconfig

brlan Link encap:Ethernet HWaddr d8:50:e6:51:af:aa

inet addr:192.168.1.10 Bcast:192.168.1.255 Mask:255.255.255.0

inet6 addr: fe80::da50:e6ff:fe51:afaa/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:3167230 errors:0 dropped:2509 overruns:0 frame:0

TX packets:1203222 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:1696031356 (1.6 GB) TX bytes:17641075957 (17.6 GB)

enp2s0 Link encap:Ethernet HWaddr d8:50:e6:51:af:aa

inet6 addr: fe80::da50:e6ff:fe51:afaa/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:119725842 errors:0 dropped:759 overruns:0 frame:0

TX packets:365393544 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:88894128127 (88.8 GB) TX bytes:506161071665 (506.1 GB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:566 errors:0 dropped:0 overruns:0 frame:0

TX packets:566 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1

RX bytes:56292 (56.2 KB) TX bytes:56292 (56.2 KB)

You should get output that looks something like this. Congrats to those that made it that far, you have an operational network bridge.

Edit the file /etc/default/lxc-net and set ‘USE_LXC_BRIDGE’ to ‘false’.

sudo nano /etc/default/lxc-net

# This file is auto-generated by lxc.postinst if it does not

# exist. Customizations will not be overridden.

# Leave USE_LXC_BRIDGE as "true" if you want to use lxcbr0 for your

# containers. Set to "false" if you'll use virbr0 or another existing

# bridge, or mavlan to your host's NIC.

USE_LXC_BRIDGE="false"

Edit the file /etc/lxc/default.conf and make LXC use our previously created bridge ‘brlan’.

$ sudo nano /etc/lxc/default.conf

lxc.network.type = veth

lxc.network.link = brlan

lxc.network.flags = up

lxc.network.hwaddr = 00:16:3e:xx:xx:xx

Here is a good point in time to reboot the machine.

$ sudo reboot

It’s time to set up our hanaexpress2 LXC container.

$ sudo lxc-create -n hanaexpress2 -t ubuntu

Checking cache download in /var/cache/lxc/xenial/rootfs-amd64 ...

Installing packages in template: apt-transport-https,ssh,vim,language-pack-en

Downloading ubuntu xenial minimal ...

I: Retrieving InRelease

I: Checking Release signature

I: Valid Release signature (key id 790BC7277767219C42C86F933B4FE6ACC0B21F32)

I: Retrieving Packages

.

.

.

This command will create the container and install a minimal Ubuntu into it. This can take some time because all packages are downloaded. After that has completed – start your hanaexpress2 host and jump into it. Be warned: You’ll be root user if you enter the container like that.

$ sudo lxc-start -n hanaexpress2

$ sudo lxc-attach -n hanaexpress2

root@hanaexpress2:/#

If you have an dhcp enabled router on your network you will already have an IP address. Otherwise you have to configure it in the file ‘/etc/network/interfaces’, just consult the Ubuntu Server Guide.

root@hanaexpress2:/# ifconfig

eth0 Link encap:Ethernet HWaddr 00:16:3e:aa:98:15

inet addr:192.168.1.237 Bcast:192.168.1.255 Mask:255.255.255.0

inet6 addr: fe80::216:3eff:feaa:9815/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:1284 errors:0 dropped:0 overruns:0 frame:0

TX packets:43 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:232489 (232.4 KB) TX bytes:8483 (8.4 KB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

Another thing to do is to set up the correct timezone for your machine.

root@hanaexpress2:/# dpkg-reconfigure tzdata

IP address provided by router, timezone set – let’s install Hana Express !

First of all install dependencies of the installer.

root@hanaexpress2:/# apt-get install uuid-runtime wget openssl libpam-cracklib libltdl7 libaio1 unzip libnuma1 csh curl openjdk-8-jre-headless

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following additional packages will be installed:

ca-certificates ca-certificates-java cracklib-runtime dbus fontconfig-config fonts-dejavu-core java-common

libavahi-client3 libavahi-common-data libavahi-common3 libcap-ng0 libcrack2 libcups2 libdbus-1-3 libfontconfig1

libfreetype6 libjpeg-turbo8 libjpeg8 liblcms2-2 libnspr4 libnss3 libnss3-nssdb libpcsclite1 libx11-6 libx11-data

libxau6 libxcb1 libxdmcp6 libxext6 libxi6 libxrender1 libxtst6 wamerican x11-common

Suggested packages:

dbus-user-session | dbus-x11 default-jre cups-common liblcms2-utils pcscd openjdk-8-jre-jamvm libnss-mdns

fonts-dejavu-extra fonts-ipafont-gothic fonts-ipafont-mincho ttf-wqy-microhei | ttf-wqy-zenhei fonts-indic zip

The following NEW packages will be installed:

ca-certificates ca-certificates-java cracklib-runtime csh curl dbus fontconfig-config fonts-dejavu-core

java-common libaio1 libavahi-client3 libavahi-common-data libavahi-common3 libcap-ng0 libcrack2 libcups2

libdbus-1-3 libfontconfig1 libfreetype6 libjpeg-turbo8 libjpeg8 liblcms2-2 libltdl7 libnspr4 libnss3

libnss3-nssdb libnuma1 libpam-cracklib libpcsclite1 libx11-6 libx11-data libxau6 libxcb1 libxdmcp6 libxext6

libxi6 libxrender1 libxtst6 openjdk-8-jre-headless openssl unzip uuid-runtime wamerican wget x11-common

0 upgraded, 45 newly installed, 0 to remove and 0 not upgraded.

Need to get 33.5 MB of archives.

After this operation, 121 MB of additional disk space will be used.

Do you want to continue? [Y/n]

Now jump out of the container, we have to copy the installation files to it.

root@hanaexpress2:/# exit

$ cd /home/halderm

$ sudo cp -v hx* /var/lib/lxc/hanaexpress2/rootfs/opt/

'hxe.tgz' -> '/var/lib/lxc/hanaexpress2/rootfs/opt/hxe.tgz'

'hxexsa.tgz' -> '/var/lib/lxc/hanaexpress2/rootfs/opt/hxexsa.tgz'

After we’d copy the files to the filesystem of our hanaexpress2 host, we’re going to jump back into the container and extract them there. As you can see above we’d copy them to the ‘/opt’ directory of our hanaexpress2 host. Please use the ‘/opt’ directory or any other user accessible directory to avoid any permission issues of the installer.

$ sudo lxc-attach -n hanaexpress2

root@hanaexpress2:/# cd /opt

root@hanaexpress2:/opt# tar xzf hxe.tgz

root@hanaexpress2:/opt# tar xzf hxexsa.tgz

root@hanaexpress2:/opt# chmod -R 777 setup_hxe.sh HANA_EXPRESS_20

Let the games begin

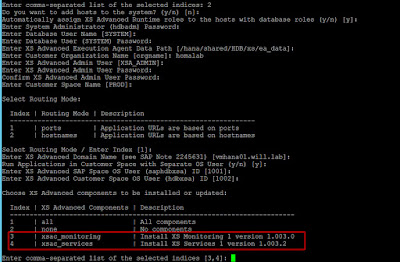

root@hanaexpress2:/opt# ./setup_hxe.sh

Enter HANA, express edition installer root directory:

Hint: <extracted_path>/HANA_EXPRESS_20

HANA, express edition installer root directory [/opt/HANA_EXPRESS_20]:

Enter component to install:

server - HANA server + Application Function Library

all - HANA server, Application Function Library, Extended Services + apps (XSA)

Component [all]:

Enter local host name [hanaexpress2]:

Enter SAP HANA system ID [HXE]:

Enter HANA instance number [90]:

Enter master password:

Confirm "master" password:

##############################################################################

Summary before execution

##############################################################################

HANA, express edition installer : /opt/HANA_EXPRESS_20

Component(s) to install : HANA server, Application Function Library, and Extended Services + apps (XSA)

Host name : hanaexpress2

HANA system ID : HXE

HANA instance number : 90

Master password : ********

Proceed with installation? (Y/N) :

What we’ll do is:

- Install required packages,

- create a network bridge,

- configure LXC,

- create an LXC container and

- install HANA Express Edition in that container.

What we’ll get is:

- HANA Express Edition running in a Linux container,

- most of the isolation benefits of a virtual machine,

- a much smaller memory footprint than a full VM,

- and near bare-metal speed.

Installing Required Packages

$ sudo apt-get install lxc lxc-templates wget bridge-utils

Creating a Network Bridge

Before we create the network bridge, we have to gather some information about our system.

We have to identify the nameserver and the default gateway, and get familiar with our primary network interface.

$ sudo cat /etc/resolv.conf

# Dynamic resolv.conf(5) file for glibc resolver(3) generated by resolvconf(8)

# DO NOT EDIT THIS FILE BY HAND -- YOUR CHANGES WILL BE OVERWRITTEN

nameserver 192.168.1.1

The nameserver on my system is the machine with the IP address 192.168.1.1.

$ sudo route

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

default 192.168.1.1 0.0.0.0 UG 0 0 0 enp2s0

192.168.1.0 * 255.255.255.0 U 0 0 0 enp2s0

The default gateway on my system is the machine with the IP address 192.168.1.1.

$ sudo ifconfig

enp2s0 Link encap:Ethernet HWaddr d8:50:e6:51:af:aa

inet addr:192.168.1.236 Bcast:192.168.1.255 Mask:255.255.255.0

inet6 addr: fe80::216:3eff:fe4c:1d79/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:49 errors:0 dropped:0 overruns:0 frame:0

TX packets:13 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:12962 (12.9 KB) TX bytes:1478 (1.4 KB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)

My primary network interface is enp2s0 with the IP address 192.168.1.236 and a subnet mask of 255.255.255.0. Now let’s create a network bridge by editing the file /etc/network/interfaces.
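The three lookups above use the older net-tools commands (`route`, `ifconfig`). Below is a minimal sketch of the same lookups using iproute2, assuming bash and awk on the host. The helper names `nameservers`, `default_gateway` and `default_interface` are hypothetical. Each one reads command output on stdin.

```bash
# Sketch: the same lookups with iproute2 instead of net-tools.
# Each helper reads text on stdin, so it works on live output or a saved sample.

# Print every nameserver from resolv.conf-formatted input.
nameservers() {
  awk '$1 == "nameserver" { print $2 }'
}

# Print the gateway from `ip route show default` output,
# e.g. "default via 192.168.1.1 dev enp2s0 onlink".
default_gateway() {
  awk '$1 == "default" { for (i = 2; i < NF; i++) if ($i == "via") { print $(i + 1); exit } }'
}

# Print the device that carries the default route, from the same output.
default_interface() {
  awk '$1 == "default" { for (i = 2; i < NF; i++) if ($i == "dev") { print $(i + 1); exit } }'
}

# Example usage on the host (no root needed):
#   nameservers < /etc/resolv.conf
#   ip route show default | default_gateway
#   ip route show default | default_interface
#   ip -4 addr show dev enp2s0    # "inet 192.168.1.236/24": /24 is 255.255.255.0
```

On Ubuntu Desktop, /etc/resolv.conf may list 127.0.1.1 instead of your router. That address belongs to a local dnsmasq started by NetworkManager. In that case, use the router’s address for dns-nameservers.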

$ sudo nano /etc/network/interfaces

# This file describes the network interfaces available on your system

# and how to activate them. For more information, see interfaces(5).

source /etc/network/interfaces.d/*

# The loopback network interface

auto lo

iface lo inet loopback

# The primary network interface

auto enp2s0

iface enp2s0 inet manual

# bridge for VMs

auto brlan

iface brlan inet static

address 192.168.1.10

netmask 255.255.255.0

gateway 192.168.1.1

bridge_ports enp2s0

bridge_stp off

bridge_fd 9

dns-nameservers 192.168.1.1

In /etc/network/interfaces we changed the primary network interface enp2s0 to ‘manual’. This means ifupdown assigns no address to it; it now only serves as a port of the bridge. We also created a new ‘virtual’ interface named ‘brlan’, which is our network bridge and carries the host’s IP configuration.

Here is where each setting came from:

- The netmask (subnet mask) comes from the ‘sudo ifconfig’ output we looked at earlier.
- The address 192.168.1.10 is a new static IP in the same subnet; make sure no other device on your network already uses it.
- The gateway comes from the output of ‘sudo route’.
- The dns-nameservers value comes from ‘sudo cat /etc/resolv.conf’.
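To make the link between the gathered values and the configuration explicit, here is a minimal sketch that prints the enp2s0 and brlan stanzas from parameters. `bridge_stanza` is a hypothetical helper, not part of ifupdown or bridge-utils. The option lines are indented for readability, which ifupdown accepts.

```bash
# Sketch: print the interface stanzas used above from the collected values.
# bridge_stanza is a hypothetical helper; review its output before
# pasting it into /etc/network/interfaces.
bridge_stanza() {
  local bridge=$1 nic=$2 address=$3 netmask=$4 gateway=$5 dns=$6
  cat <<EOF
# The primary network interface
auto ${nic}
iface ${nic} inet manual

# bridge for VMs
auto ${bridge}
iface ${bridge} inet static
    address ${address}
    netmask ${netmask}
    gateway ${gateway}
    bridge_ports ${nic}
    bridge_stp off
    bridge_fd 9
    dns-nameservers ${dns}
EOF
}

# Example with the values from this article:
#   bridge_stanza brlan enp2s0 192.168.1.10 255.255.255.0 192.168.1.1 192.168.1.1
```

Per bridge-utils-interfaces(5):

- `bridge_ports` lists the physical interfaces enslaved to the bridge.
- `bridge_stp off` disables the spanning tree protocol.
- `bridge_fd` sets the forward delay in seconds.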

Fire up the bridge

$ sudo service networking restart

If you are connected via SSH, you will lose the connection at this step; reconnect using the new IP address 192.168.1.10. Let’s check the result first.

$ sudo ifconfig

brlan Link encap:Ethernet HWaddr d8:50:e6:51:af:aa

inet addr:192.168.1.10 Bcast:192.168.1.255 Mask:255.255.255.0

inet6 addr: fe80::da50:e6ff:fe51:afaa/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:3167230 errors:0 dropped:2509 overruns:0 frame:0

TX packets:1203222 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:1696031356 (1.6 GB) TX bytes:17641075957 (17.6 GB)

enp2s0 Link encap:Ethernet HWaddr d8:50:e6:51:af:aa

inet6 addr: fe80::da50:e6ff:fe51:afaa/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:119725842 errors:0 dropped:759 overruns:0 frame:0

TX packets:365393544 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:88894128127 (88.8 GB) TX bytes:506161071665 (506.1 GB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:566 errors:0 dropped:0 overruns:0 frame:0

TX packets:566 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1

RX bytes:56292 (56.2 KB) TX bytes:56292 (56.2 KB)

You should get output that looks something like this. Congrats to those who made it this far: you have an operational network bridge.
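Instead of comparing ifconfig output by eye, you can check the bridge with `ip` and sysfs. This is a sketch, assuming iproute2 (installed by default on Ubuntu 16.04) and the usual sysfs layout, where the kernel lists a bridge’s ports under /sys/class/net/<bridge>/brif/. The names `has_ipv4`, `is_bridge_port` and the `SYSFS_NET` override are hypothetical.

```bash
# Sketch: verify the bridge without reading ifconfig output by eye.

# Succeeds if `ip -o -4 addr show` output on stdin lists ADDRESS
# (with any prefix length) on device DEV.
has_ipv4() {
  local dev=$1 address=$2
  awk -v dev="$dev" -v addr="$address" '
    $2 == dev && $3 == "inet" { split($4, a, "/"); if (a[1] == addr) found = 1 }
    END { exit !found }'
}

# Succeeds if NIC is a port of BRIDGE; the kernel lists bridge ports
# under /sys/class/net/<bridge>/brif/. SYSFS_NET can be overridden for testing.
is_bridge_port() {
  local bridge=$1 nic=$2
  [ -e "${SYSFS_NET:-/sys/class/net}/${bridge}/brif/${nic}" ]
}

# Example usage on the host:
#   ip -o -4 addr show | has_ipv4 brlan 192.168.1.10 && echo "address OK"
#   is_bridge_port brlan enp2s0 && echo "enp2s0 is a port of brlan"
#   brctl show brlan    # the same port information from bridge-utils
```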

Configuring LXC

Edit the file /etc/default/lxc-net and set ‘USE_LXC_BRIDGE’ to ‘false’.

$ sudo nano /etc/default/lxc-net

# This file is auto-generated by lxc.postinst if it does not

# exist. Customizations will not be overridden.

# Leave USE_LXC_BRIDGE as "true" if you want to use lxcbr0 for your

# containers. Set to "false" if you'll use virbr0 or another existing

# bridge, or mavlan to your host's NIC.

USE_LXC_BRIDGE="false"
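The same edit can be made without an editor, which is handy if you script the setup. This sketch assumes GNU sed, as shipped with Ubuntu. `set_use_lxc_bridge` is a hypothetical helper: it rewrites an existing USE_LXC_BRIDGE= line, or appends one if the file has none.

```bash
# Sketch: set USE_LXC_BRIDGE non-interactively.
# set_use_lxc_bridge is a hypothetical helper; the file defaults to
# /etc/default/lxc-net, which needs root to modify.
set_use_lxc_bridge() {
  local value=$1 file=${2:-/etc/default/lxc-net}
  if grep -q '^USE_LXC_BRIDGE=' "$file"; then
    # Replace the existing assignment in place (GNU sed -i).
    sed -i "s/^USE_LXC_BRIDGE=.*/USE_LXC_BRIDGE=\"${value}\"/" "$file"
  else
    # No assignment yet: append one.
    printf 'USE_LXC_BRIDGE="%s"\n' "$value" >> "$file"
  fi
}

# Equivalent one-liner on the host:
#   sudo sed -i 's/^USE_LXC_BRIDGE=.*/USE_LXC_BRIDGE="false"/' /etc/default/lxc-net
```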

Edit the file /etc/lxc/default.conf and make LXC use our previously created bridge ‘brlan’.

$ sudo nano /etc/lxc/default.conf

lxc.network.type = veth

lxc.network.link = brlan

lxc.network.flags = up

lxc.network.hwaddr = 00:16:3e:xx:xx:xx
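The `xx` groups in lxc.network.hwaddr are a template. The lxc.container.conf(5) man page describes that every "x" is replaced by a random hex digit, so each container gets its own MAC under the 00:16:3e prefix. That is why the container below ends up with an address such as 00:16:3e:aa:98:15.

The sketch below only mimics that substitution. `random_mac` is a hypothetical helper, not an LXC command. It could be useful, for example, to pick a fixed MAC (and therefore a stable DHCP lease) for a container.

```bash
# Sketch: mimic LXC's hwaddr template expansion.
# random_mac is a hypothetical helper: each "x" becomes one random hex digit.
random_mac() {
  local template=${1:-00:16:3e:xx:xx:xx} out="" ch i
  for (( i = 0; i < ${#template}; i++ )); do
    ch=${template:i:1}
    if [ "$ch" = "x" ]; then
      ch=$(printf '%x' $((RANDOM % 16)))
    fi
    out+=$ch
  done
  printf '%s\n' "$out"
}

# Example usage:
#   random_mac                                   # e.g. a fresh 00:16:3e:..:..:.. address
#   sudo grep hwaddr /var/lib/lxc/hanaexpress2/config   # the MAC LXC should have written
```

Note that these `lxc.network.*` keys are the LXC 2.0 names used on Ubuntu 16.04. LXC 3.0 renamed them to `lxc.net.0.*`.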

This is a good point to reboot the machine.

$ sudo reboot

Creating the LXC container

It’s time to set up our hanaexpress2 LXC container.

$ sudo lxc-create -n hanaexpress2 -t ubuntu

Checking cache download in /var/cache/lxc/xenial/rootfs-amd64 ...

Installing packages in template: apt-transport-https,ssh,vim,language-pack-en

Downloading ubuntu xenial minimal ...

I: Retrieving InRelease

I: Checking Release signature

I: Valid Release signature (key id 790BC7277767219C42C86F933B4FE6ACC0B21F32)

I: Retrieving Packages

.

.

.

This command creates the container and installs a minimal Ubuntu into it. The first run can take a while because all packages are downloaded; later runs reuse the cache in /var/cache/lxc. Once it has completed, start your hanaexpress2 container and attach to it. Be warned: attaching this way gives you a root shell inside the container.

$ sudo lxc-start -n hanaexpress2

$ sudo lxc-attach -n hanaexpress2

root@hanaexpress2:/#

If there is a DHCP-enabled router on your network, the container already has an IP address. Otherwise, configure one in the container’s ‘/etc/network/interfaces’; the Ubuntu Server Guide explains how.

root@hanaexpress2:/# ifconfig

eth0 Link encap:Ethernet HWaddr 00:16:3e:aa:98:15

inet addr:192.168.1.237 Bcast:192.168.1.255 Mask:255.255.255.0

inet6 addr: fe80::216:3eff:feaa:9815/64 Scope:Link

UP BROADCAST RUNNING MULTICAST MTU:1500 Metric:1

RX packets:1284 errors:0 dropped:0 overruns:0 frame:0

TX packets:43 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1000

RX bytes:232489 (232.4 KB) TX bytes:8483 (8.4 KB)

lo Link encap:Local Loopback

inet addr:127.0.0.1 Mask:255.0.0.0

inet6 addr: ::1/128 Scope:Host

UP LOOPBACK RUNNING MTU:65536 Metric:1

RX packets:0 errors:0 dropped:0 overruns:0 frame:0

TX packets:0 errors:0 dropped:0 overruns:0 carrier:0

collisions:0 txqueuelen:1

RX bytes:0 (0.0 B) TX bytes:0 (0.0 B)
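Right after lxc-start, the container may need a few seconds to get its DHCP lease. This sketch polls for it from the host. It assumes the `IP:` lines that `lxc-info -n NAME -i` prints, and it has to run as root, like the other lxc-* commands here. `wait_for_ip` is a hypothetical helper.

```bash
# Sketch: wait until a container reports an IPv4 address.
# wait_for_ip is a hypothetical helper; run it as root on the host.
wait_for_ip() {
  local name=$1 tries=${2:-30} addr
  while (( tries-- > 0 )); do
    # Take the first IPv4 address from lines like "IP:   192.168.1.237".
    addr=$(lxc-info -n "$name" -i 2>/dev/null |
           awk '$1 == "IP:" && $2 ~ /^[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+$/ { print $2; exit }')
    if [ -n "$addr" ]; then
      printf '%s\n' "$addr"
      return 0
    fi
    sleep 1
  done
  return 1
}

# Example usage in a script run as root on the host:
#   lxc-start -n hanaexpress2
#   wait_for_ip hanaexpress2 || echo "no DHCP lease yet" >&2
```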

Next, set the correct timezone for the container.

root@hanaexpress2:/# dpkg-reconfigure tzdata

With the IP address provided by the router and the timezone set, let’s install HANA Express!

Installing HANA Express

First, install the installer’s dependencies.

root@hanaexpress2:/# apt-get install uuid-runtime wget openssl libpam-cracklib libltdl7 libaio1 unzip libnuma1 csh curl openjdk-8-jre-headless

Reading package lists... Done

Building dependency tree

Reading state information... Done

The following additional packages will be installed:

ca-certificates ca-certificates-java cracklib-runtime dbus fontconfig-config fonts-dejavu-core java-common

libavahi-client3 libavahi-common-data libavahi-common3 libcap-ng0 libcrack2 libcups2 libdbus-1-3 libfontconfig1

libfreetype6 libjpeg-turbo8 libjpeg8 liblcms2-2 libnspr4 libnss3 libnss3-nssdb libpcsclite1 libx11-6 libx11-data

libxau6 libxcb1 libxdmcp6 libxext6 libxi6 libxrender1 libxtst6 wamerican x11-common

Suggested packages:

dbus-user-session | dbus-x11 default-jre cups-common liblcms2-utils pcscd openjdk-8-jre-jamvm libnss-mdns

fonts-dejavu-extra fonts-ipafont-gothic fonts-ipafont-mincho ttf-wqy-microhei | ttf-wqy-zenhei fonts-indic zip

The following NEW packages will be installed:

ca-certificates ca-certificates-java cracklib-runtime csh curl dbus fontconfig-config fonts-dejavu-core

java-common libaio1 libavahi-client3 libavahi-common-data libavahi-common3 libcap-ng0 libcrack2 libcups2

libdbus-1-3 libfontconfig1 libfreetype6 libjpeg-turbo8 libjpeg8 liblcms2-2 libltdl7 libnspr4 libnss3

libnss3-nssdb libnuma1 libpam-cracklib libpcsclite1 libx11-6 libx11-data libxau6 libxcb1 libxdmcp6 libxext6

libxi6 libxrender1 libxtst6 openjdk-8-jre-headless openssl unzip uuid-runtime wamerican wget x11-common

0 upgraded, 45 newly installed, 0 to remove and 0 not upgraded.

Need to get 33.5 MB of archives.

After this operation, 121 MB of additional disk space will be used.

Do you want to continue? [Y/n]
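If you would rather stay on the host, you can install the same packages through lxc-attach. It runs whatever command follows `--` inside the container. This sketch assumes it runs as root. `install_hxe_deps` and the `HXE_DEPS` array are hypothetical names. `-y` answers the confirmation prompt shown above, and the `apt-get update` step is an addition not in the original steps.

```bash
# Sketch: install the installer's dependencies from the host.
# HXE_DEPS and install_hxe_deps are hypothetical names.
HXE_DEPS=(uuid-runtime wget openssl libpam-cracklib libltdl7 libaio1 unzip
          libnuma1 csh curl openjdk-8-jre-headless)

install_hxe_deps() {
  local name=$1
  # Refresh the package lists, then install without prompting.
  lxc-attach -n "$name" -- apt-get update &&
    lxc-attach -n "$name" -- apt-get install -y "${HXE_DEPS[@]}"
}

# Example usage in a script run as root on the host:
#   install_hxe_deps hanaexpress2
```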

Now exit the container; we have to copy the installation files into it from the host.

root@hanaexpress2:/# exit

$ cd /home/halderm

$ sudo cp -v hx* /var/lib/lxc/hanaexpress2/rootfs/opt/

'hxe.tgz' -> '/var/lib/lxc/hanaexpress2/rootfs/opt/hxe.tgz'

'hxexsa.tgz' -> '/var/lib/lxc/hanaexpress2/rootfs/opt/hxexsa.tgz'

With the files copied into the filesystem of our hanaexpress2 container, we jump back into the container and extract them there. As you can see above, we copied them to the container’s ‘/opt’ directory. Please use ‘/opt’ or another directory accessible to all users to avoid permission issues with the installer.
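Copying through the container’s root filesystem works because, for a privileged container created with `sudo lxc-create` on the default directory backing store, that filesystem is a plain directory under /var/lib/lxc/<name>/rootfs on the host. Unprivileged containers live elsewhere and map UIDs, so this does not apply to them as-is.

Below is a sketch of the copy step with a sanity check. `copy_to_container` is a hypothetical helper, and `LXC_PATH` is an assumed override for /var/lib/lxc.

```bash
# Sketch: copy files into a privileged container's filesystem from the host.
# copy_to_container is a hypothetical helper, not an LXC command.
copy_to_container() {
  local name=$1 dest=$2
  shift 2
  local rootfs="${LXC_PATH:-/var/lib/lxc}/${name}/rootfs"
  # Refuse to continue if the container's rootfs does not exist.
  if [ ! -d "$rootfs" ]; then
    echo "no rootfs at $rootfs" >&2
    return 1
  fi
  mkdir -p "${rootfs}${dest}" && cp -v "$@" "${rootfs}${dest}/"
}

# Example usage on the host (as root), mirroring the commands above:
#   copy_to_container hanaexpress2 /opt /home/halderm/hx*
```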

$ sudo lxc-attach -n hanaexpress2

root@hanaexpress2:/# cd /opt

root@hanaexpress2:/opt# tar xzf hxe.tgz

root@hanaexpress2:/opt# tar xzf hxexsa.tgz

root@hanaexpress2:/opt# chmod -R 777 setup_hxe.sh HANA_EXPRESS_20

Let the games begin

root@hanaexpress2:/opt# ./setup_hxe.sh

Enter HANA, express edition installer root directory:

Hint: <extracted_path>/HANA_EXPRESS_20

HANA, express edition installer root directory [/opt/HANA_EXPRESS_20]:

Enter component to install:

server - HANA server + Application Function Library

all - HANA server, Application Function Library, Extended Services + apps (XSA)

Component [all]:

Enter local host name [hanaexpress2]:

Enter SAP HANA system ID [HXE]:

Enter HANA instance number [90]:

Enter master password:

Confirm "master" password:

##############################################################################

Summary before execution

##############################################################################

HANA, express edition installer : /opt/HANA_EXPRESS_20

Component(s) to install : HANA server, Application Function Library, and Extended Services + apps (XSA)

Host name : hanaexpress2

HANA system ID : HXE

HANA instance number : 90

Master password : ********

Proceed with installation? (Y/N) :