A practical example of HANA optimization

In this blog, I’ll try to recap my journey through the land of SELECT’s and internal tables towards the realm of HANA. I wanted to take a slow-performing ABAP, not something from a tutorial or playground module from SAP, but something “real”, tangible, a proper “pain point”, and describe how it can be converted to using HANA view(s).

It became clear to me while writing up my experiences that this is not a task easily undertaken. First of all, ripping apart an existing ABAP – no matter how “simple” and “straightforward” it can appear at first sight, is not an easy job. Secondly, wrapping it all up in a comprehensible blog, complete with bullet-proof points and comprehensible screenshots, is aneven more difficult achievement. At times, I was not even sure if this was doable, or whether anyone could recap any learnings from what I had been through. Surely, there are more straightforward tutorial and examples out there?

But then again, why not? I finally decided that the only format I could give this document, is in the way of “key learnings” and general tips. I learned a lot, and hopefully I’ll be able to pass on some of the ideas I had along the way to others. I’m not going to discuss the original ABAP code in all its gory detail, this would possibly help you to understand the inner workings of the original program but would take ages. Instead, I hope to convey the general idea of the program to you, and show how you can translate it into HANA. I’ll explain the three view types, attribute, analytical, and calculation, and show you how I used them (and, more importantly, why I used them). I’ll also show some of the pitfalls and tips along the way, to help you avoid the same.

And, finally, this is my way of doing it. That’s a disclaimer, by the way, not a nod to Sinatra. There might be (and likely is!) better ways of solving the particular issue I had, and better ways of avoiding pitfalls. There might even be new issues arising from the way I implemented my solution – feel free to comment. I’m here to learn as much as you are.

How to find a suitable candidate

Some people use speed dating, others register to various internet-based… oh, sorry. We were talking about HANA-optimization, not marriage prospects.

I found a nice program in dire need of being optimized by executing ST03N in a production system. ST03N is the workload monitor, sort of the “health display” of your Netweaver system. It has lots of cool features; among others is an option to look at the statistics for the most resource-demanding applications (or processes) running in that particular systems. Boy, was I happy when one of the top offenders turned out to be a custom-made ABAP! I quickly decided to rip that piece of underachieving code to pieces, replacing every sub-performing line with calls to some sleek & cool HANA views. I envisioned my re-worked ABAP/HANA hybrid development as a sleek Ferrari, blasting past the sluggish old ABAP original. And, of course, it would be a fun, easy-piecy job to do.

I would bitterly regret that decision.

Wow, it’s so…. BIG!!

Looking back, I can honestly say I made a huge mistake selecting this particular development for HANA optimization. Don’t get me wrong; I learned a lot along the way, but for a first-time project, something smaller and more manageable would have been far more suitable. I’ll repeat this in the key learnings session towards the end, but here goes: pick a development that spans 5-10 tables, not more. It’s far better to scale a small ridge first, before setting your sight on Everest.

And the problem with large ABAP developments (particularly old, somewhat chaotic ABAP developments) is that they’re unwieldy, erratic, chaotic, and obfuscated. Of course, this provides more opportunity for optimization, but you might just end up completely overwhelmed, and give up. Or have a heart attack. Or attempt to stick your laptop down the shredder (it won’t fit. Not even the newer, sleeker models with solid state drives. Trust me). A look at Mt. Everest from a distance of roughly 2.5 million milliseconds:

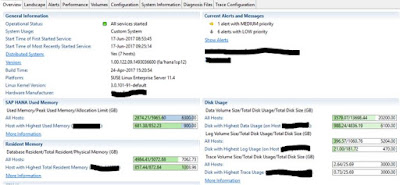

This, then, is the offending development; a seemingly inconspicuous report that calculates transportation costs. As can be seen, this is the third most charging transaction/program, measured in processing time as seen above. The screenshot is from a Productive environment.

I decided to look closer at the performance. Unable to do a proper runtime analysis in a production environment, I had to resort to a QA system, where data volumes were far lower. Still, I found some promising details:

When looking at the Hit list, we see that a lot of time is spent doing unit conversions:

Quite a bit of time is spent calling functions for unit conversions. This is actually one of HANA’s quick wins: you can avoid calling unit or currency conversions bozillions of times, simply by letting the HANA engine handle it.

So far, so good. I had my candidate.

How not to do things.

My first mistake was to go through the ABAP code from START-OF-SELECTION onwards, slashing out at each and every SELECT statement with my HANA machete, attempting to replace them all with HANA views, one by one. Well, not actually one by one, but more like merging related tables into attribute views, then collating these into analytical views, sort of hoping that I’d reach the summit before sunset. As by magic. This, of course, didn’t work. As any half-decent mountaineer knows, a bit of planning always pays off. Also, when it comes to old, grumpy ABAP’s, it’s not always obvious how a

development works.

As programs go, this one was actually quite well structured. Sort of. It consisted of roughly 15 FORM’s, where data was being selected into internal tables, followed by one final FORM merging everything together into the final display table used for the ALV. The problem was that the top-down approach which I initially started out with, failed to take into account everything that went on in the final FORM. Here, a LOOP at the “main” internal table (containing shipments, from tables VTTK/VTTP), contained READ statements for other internal tables (such as deliveries). By looking at these READ’s, I was shocked to see that in some cases, a missing corresponding entry in a secondary internal table would result in a CONTINUE statement.

Say what?

Consider the following:

LOOP at outer_table into work_area.

READ inner_table WITH KEY so_and_so_from_outer_table BINARY SEARCH.

IF sy-subrc <> 0.

CONTINUE.

ENDIF.

MOVE bunch_of_fields to final_internal_table.

ENDLOOP.

What happens here is that whenever there’s no corresponding entry in the inner table, the LOOP continues. What it really is, is an INNER JOIN on the two tables. Only if both contain corresponding entries, is the data collated into the resulting final table.

And this, of course, translates nicely into HANA. Problem is, you won’t “get it” until you arrive at that final piece of logic, and by then, you realize you have to re-structure your whole suite of HANA views. Or at least some of them.

But let’s not jump to conclusions. Let’s start with the beginning.

The original program structure

I mentioned the original program selected data from a range of tables, stored this in internal tables, then collating everything into a final table destined for ALV fame. This is a pattern followed by countless top-down reporting programs, and one which should – at least theoretically – be fairly simple to convert to HANA views, at least some portions of it.

I’m not going to go into lengthy (and boring) details about the inner workings of the program. I actually thought of doing so, but rejected the idea after a feeble attempt to recap all FORM’s and each and every SELECT. Instead, I’ll show you the main structure, since this is by far the easiest way to understand what’s going on. It also helps to comprehend why I structured my views the way I did. Here goes:

- SELECT Shipments (headers and items) from VTTK and VTTP

- SELECT Deliveries from LIKP for all the shipments

- Do some unit conversions on a variety of fields

- SELECT various other values from various lookup tables, for shipments and deliveries

- SELECT some other aggregated values from a range of interrelated tables, based on already selected shipments or deliveries

- Merge everything (which is currently stored in internal tables) into a final result table by LOOPing at the main shipment internal table and doing READ TABLE for everything else

- Display the resulting internal table as an ALV

The above looks simple enough. The problem is we are talking roughly 15 different FORMs, each with selections from 1-2 specific DB tables. Some of the resulting internal tables are aggregations, not directly related to the shipments or deliveries, but based on other terms. This complicates a bit.

The full list of FORMS looks like this:

As this is not a step-by-step blog outlining every single change made when transforming the above ABAP to HANA, I’ll instead focus on some key steps and learnings. The above should nevertheless give a decent impression of what the original development does, and how it selects its data.

Step 1: Start by looking at the final output.

What does the original program do? More often than not, the best way of understanding this is by looking at the final ALV table or output. It usually gives you a general idea on how to structure your main view, which will usually be an analytical or (more often) calculation view. In our case, the final output shows “shipments with related deliveries and some other related info”. The main LOOP in the final FORM that builds this internal table, is on an internal table containing data from VTTK and VTTP. This actually provides a nice tip on how your “data foundation” should look like. In general, the “main” tables of the final output are decent candidates for the “data foundation” of an analytical view. The “lookup” tables will be the JOINed tables of the same analytical view, or form part of their own analytical views if you need to aggregate anything.

By looking at the program structure above, we can already deduce that VTTK-VTTP will form a major part of the “data foundation”, likely of an analytical view. Likewise, since there is a direct relation to deliveries (table LIKP) we may be able to include LIKP in the data foundation of our main view.

And, before you ask: how do we know our main view will be an analytical view? Because we probably have to aggregate something. Usually, there’s no point outputting this kind of info without displaying some SUMmed quantities or amounts, like total delivery quantity per shipment, or total billed amount per customer. So, it’s fair to assume we’l be building at least one analytical view, somewhere along.

Of course, we’ll possibly end up needing a calculation view in the end. This is also a simple deduction: if we need more than one analytical view, we’ll need to JOIN these in a… calculation view, simply because you can’t JOIN an analytical view inside another analytical view. Too bad, really.

Step 2: create attribute views for simple hierarchies (including single tables)

Often, you will see simple structures like header-item, or a limited number of tables related to each other using referential joins, where we just need simple fields as output, without any measures (aggregations). These can easily go into attribute views. The same goes for single tables. The reason for creating attribute views for single tables is simple: this allows you to include then in analytical views, using JOINs. Other benefits of using attribute views are that you can set filters, and restrict the number of fields selected.

Example 1: attribute view for one table, filter set on SPRAS:

Example 2: attribute view including several tables

Based on my program logic, I ended up with the following list of attribute views:

Most of these contain one single table. Also, most of them have a filter value on MANDT and SPRAS (I could also have defined input parameters – however, I came across an interesting “feature” regarding the client field – MANDT – more about that below).

Step 3: create analytical views for aggregations, even within one table

Now, the time has come for creating analytical views for anything that is, um, aggregated.

Example 1: analytical view on LIPS-LFIMG (delivery quantity):

This view simply aggregates the delivery item quantity LFIMG for all delivery items belonging to the same delivery number (VBELN). It contains one table. LFIMG is set to a measure in the Semantics section.

Example 2 : analytical view on VFKP-VFKN which aggregates NETWR on REBEL:

This is a view involving two tables, VFKP and VFKN. Here, the measure NETWR is accumulated per REBEL (VFKP-REBEL, not VFKN-REBEL which is visible in the screenshot. We also need VFKN-REBEL since it is referenced in another view, hence showing).

Example 3: the “main” analytical view, combining all my attribute views with a multi-table “data foundation”:

Here, we see several tables in the analytical view’s Data Foundation. Do not make the misconception that a data foundation can only contain one table. In the above view, the data foundation comprises of four tables:

This foundation is then JOINed with the attribute views to provide the backbone of the main result table.

We also see some calculated fields:

This, by the way, is one of the staples of HANA – the ability to do currency and unit conversions “on the fly”.

Step 4: finish by creating calculation views – if necessary.

Calculation views are needed if you have created several analytical views, and need to join these, such as in my example. I already have a “main” analytical view (above), and also two others, since I needed to aggregate various quantities for deliveries etc.

This means I cannot simply stay with my analytical “main” view, since analytical views only accept attribute views in the JOIN section…

My resulting calculation view looks like this:

Here, I start with my main view, containing my “main loop” tables and the attribute views. I then JOIN a second analytical view, Z_AN_DEL_QUAN, to get delivery quantities aggregated by delivery number. So far, so good. In my second JOIN, I add another attribute view, showing a fairly complex constellation of tables:

The reason for this is more for simplicity. The above collection of tables deserved their own attribute view, and the JOIN happens in the calculation view (instead of joining into the “main” analytical view) because it provides a better way of structuring the views. Less clutter, more readability.

The final JOIN (“Join_3”) sees the result of the two previous JOINs being joined to the last analytical view:

As can be seen, the relationship is based on two fields, FKNUM and VBELN.

Step 5: Validate along the way.

This is something you should do with all views; look at the raw data output and try to spot any issues.

Step 6: create an ABAP external dictionary view

One thing to know about creating external views for ABAP, is that the mapping suggestions are not 100% correct for some fields, notably date, time, currency and quantity fields. These will have to be mapped manually (below screenshot shows some of them, but not all):

Use the original DD tables in the ABAP dictionary when correcting the mapping proposed by the HANA Studio, which will usually be CHAR.

Another thing to beware of is that HANA has an ambiguous attitude towards reference fields (at least as of SP6):

It turns out we haven’t specified the reference fields for the amounts and quantities in our analytical view. However, there is no obvious way of doing this… a post elsewhere on SCN seems to suggest there is currently no way of handling this. Thus, we cannot use QUAN fields in external ABAP views for the time being – all our quantity and currency fields have to be set as DECIMAL.

Wrapping up: calling the external view from ABAP

Finally, at the end of a lengthy process, we can swap all the FORM’s mentioned at the start with a simple SELECT on our newly defined

view:

How immensely rewarding!

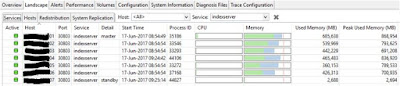

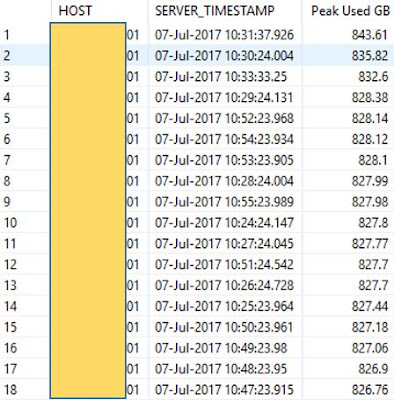

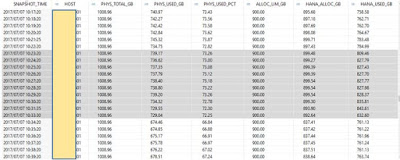

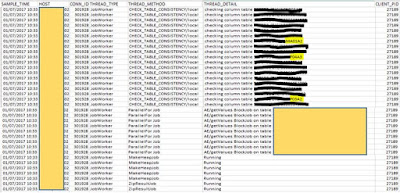

Performance analysis

For the performance analysis, transaction SAT was run for both the developments (original and HANA view-based version). A few pre-runs were done immediately before the measurements to fill buffers etc.

Result:

Original development:

New HANA-based development:

We see that the run time of our new development is roughly 13% of that of the original. Quite a nice improvement. Our system contains very limited data volumes, and higher-volume environments might show different savings – perhaps even greater.

Key learnings

And here, finally, I’ll list a few of the key learnings gathered during this exercise. This is not an exhaustive list, but hopefully useful…

- Revise the original ABAP code first; there might be issues of bad design (refactoring needed), sub-optimal performance (bad SELECT’s, badly designed LOOP logic, wrong type of internal tables etc.), plus subsequent modifications may have left the ABAP code suffering in other ways (I came across an internal table that was no longer used – the code reading it was commented – but the original SELECT was still active… a second case involved exhaustive unit conversions which were then never displayed in the final output list. Somewhere down the line, they had been omitted – but the call to the conversion functions was still active…)

- Simplify HANA view creation by starting with attribute views, then analytical views, then (if needed) calculation views. Do not attempt to make the views too complicated – it’s better to split logic up into several views. I don’t have performance data to back this up, but the application will be easier to maintain – also if you have to revise and re-test a specific view.

- As for view types: you’ll need attribute views for single tables and simple relations, as long as there are no aggregations involved. Analytical views for aggregating data (think: sums). And calculation views if you need to JOIN one analytical view to at least one other.

- The DATA Foundation of your analytical view can accommodate more than one table. In fact, it’s perfectly OK to JOIN tables to your “main” data foundation table, directly in the “data foundation” itself – instead of joining them in the “Logical join”.

- Do iterative testing – use the data preview in HANA to verify the output of each view separately, instead of waiting until everything is assembled in a final calculation view.

- Take note that MANDT is not a “forgettable” field, like in ABAP. Here is an example:

View Z2_AT_ROUTE_DESC without filter on MANDT:

Data preview:

We see duplicates. This is regardless of the fact that the session client has been defined in the view’s Semantics:

Setting the default client to “103” resolves the issue:

Data preview:

- Make sure to distinguish about Attributes and Measures – quantities and amounts could come out wrongly if this is erroneously set in the Semantics section. For instance, if you have a calculation view with more than one JOIN section, specifying quantities/amounts as “measures” everywhere can end up giving erroneous sums – based on your joins. If a quantity or amount is a measure in an originating view, it should be defined as an attribute if the purpose is to just keep the original figure through all your joins.

- Don’t rely on being able to define reference fields for external (ABAP) views in HANA (using QUAN or CURR fields) – it doesn’t work, even though the error messages say you have to do it. You’ll have to resort to specifying these as DECIMAL instead.

- Bring enough torches when entering a cave!