This blog describes how to transport a user role between two Tenants within one instance of SAP HANA 2.0 Express.

To enlarge the pictures, press Ctrl and + to zoom in and Ctrl and - to zoom out.

How to download and install SAP HANA Express is explained in this YouTube video.

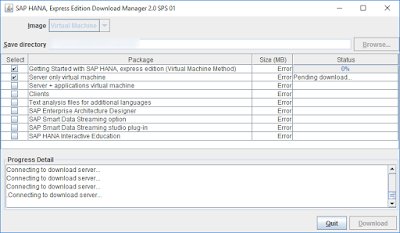

I am using the package “Server only virtual machine”.

To access the VMware server via the hostname hxehost, the local hosts file C:\Windows\System32\drivers\etc\hosts needs to be adapted.

hxehost-src and hxehost-trg are two virtual hostnames that SAP HANA’s Web Dispatcher uses to forward each request to the corresponding Tenant Database.
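As a sketch, the entry in the Windows hosts file could look like the lines below. `<vm-ip-address>` is a placeholder for the IP address of your HANA Express VM, and I assume all three names point to that same VM. Editing this file on Windows requires administrator rights; the same line format also works in /etc/hosts on Linux.

```text
# C:\Windows\System32\drivers\etc\hosts
# <vm-ip-address> is a placeholder: replace it with the IP address of the HANA Express VM
<vm-ip-address>    hxehost    hxehost-src    hxehost-trg
```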

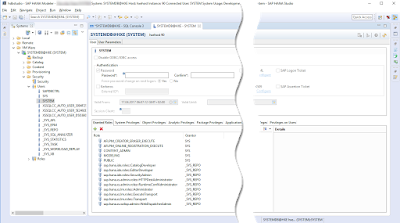

Once the instance is registered in SAP HANA Studio, the two Tenant Databases are created.

The statements are (run in the System Database):

```sql
-- Create Source Tenant Database (*** stands for the password of its SYSTEM user)
create database src system user password ***;

-- Create Target Tenant Database
create database trg system user password ***;

-- Configure Routing to Tenant Databases
-- SRC
ALTER SYSTEM ALTER CONFIGURATION ('xsengine.ini', 'database', 'SRC')
  SET ('public_urls', 'http_url') = 'http://hxehost-src:8090' WITH RECONFIGURE;

-- TRG
ALTER SYSTEM ALTER CONFIGURATION ('xsengine.ini', 'database', 'TRG')
  SET ('public_urls', 'http_url') = 'http://hxehost-trg:8090' WITH RECONFIGURE;

-- Validate proper configuration of the Web Dispatcher
select key, value, layer_name
  from sys.M_INIFILE_CONTENTS
 where file_name = 'webdispatcher.ini'
   and section = 'profile'
   and key like 'wdisp/system%';
```

The output of the SELECT statement shows this:

This means the Web Dispatcher is contacted on port 8090, and when the hostname used is hxehost-src, it forwards the request to tenant SRC. This is described in detail in the Database Admin Guide, chapter “12.1.8.3 Configure HTTP(S) Access to Tenant Databases via SAP HANA XS Classic”.
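To double-check the routing from the tenant side, a query like the following could be run while connected to SRC, and again while connected to TRG. It reads the `public_urls` section of `xsengine.ini` that the ALTER SYSTEM statements above write. This is a sketch: I would expect a row with key `http_url` pointing to the tenant's own virtual URL, but treat that as an assumption and compare against your own output.

```sql
-- Run while connected to tenant SRC, then repeat for TRG.
-- Lists the public URL settings that XS Classic uses for this tenant.
SELECT FILE_NAME, SECTION, KEY, VALUE, LAYER_NAME
  FROM SYS.M_INIFILE_CONTENTS
 WHERE FILE_NAME = 'xsengine.ini'
   AND SECTION   = 'public_urls';
```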

So that the database can also resolve the virtual hostnames internally, I added them to the file /etc/hosts on the VMware host as well.

For the Tenant Databases to be able to log on to the XS Classic engine, a set of Roles is required that is not available on the Tenant Databases right away. To make these Roles available, I first log on to the XS Classic engine of the System Database. For that, user SYSTEM of the System Database needs additional Roles.

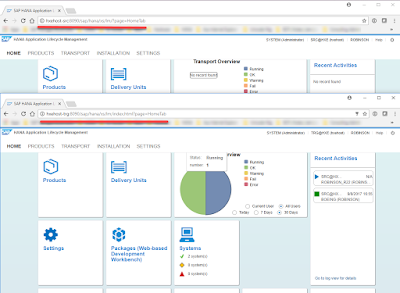
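The exact Roles I granted are shown in the screenshot. As a minimal SQL sketch, activated repository roles are granted with the procedure `_SYS_REPO.GRANT_ACTIVATED_ROLE`, because they are owned by `_SYS_REPO` rather than by the granting user. The role name `sap.hana.xs.lm.roles::Administrator` below is an assumption standing in for the Roles in the screenshot; call the procedure once per Role you need.

```sql
-- Run in the System Database.
-- Assumption: sap.hana.xs.lm.roles::Administrator is one of the Roles from the screenshot;
-- repeat the CALL for every other Role that is required.
CALL "_SYS_REPO"."GRANT_ACTIVATED_ROLE"('sap.hana.xs.lm.roles::Administrator', 'SYSTEM');
```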

After these Roles are granted, user SYSTEM can log on to SAP HANA Application Lifecycle Management (HALM) on the System Database.

After this login, the required Roles are also available on each Tenant Database.
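One way to confirm this on a tenant is to look for the HALM Roles in its catalog. This is a sketch: filtering on the `sap.hana.xs.lm.roles` namespace is my assumption about which Roles matter here, so widen the LIKE pattern if you need others.

```sql
-- Run while connected to a Tenant Database (SRC or TRG).
-- Lists the HALM roles that exist in this tenant's catalog.
SELECT ROLE_NAME
  FROM SYS.ROLES
 WHERE ROLE_NAME LIKE 'sap.hana.xs.lm.roles::%'
 ORDER BY ROLE_NAME;
```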

Please note: The virtual hostnames hxehost-src and hxehost-trg are not used for the registration in SAP HANA Studio. For that, I use the hostname hxehost together with the name of the Tenant Database.

Once the users of Tenant Databases SRC and TRG have the required Roles assigned, a login to the tenant-specific XS Classic engine should be possible.
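To verify which XS Roles a tenant user actually has before trying the login, a query like this could be used. `'SYSTEM'` is only an example; replace it with the tenant user you log on with. Restricting the result to the `sap.hana.xs.` namespace is an assumption made to keep the output short.

```sql
-- Run in the Tenant Database (SRC or TRG).
-- Replace 'SYSTEM' with the tenant user used for the XS Classic login.
SELECT GRANTEE, ROLE_NAME, GRANTOR
  FROM SYS.GRANTED_ROLES
 WHERE GRANTEE = 'SYSTEM'
   AND ROLE_NAME LIKE 'sap.hana.xs.%'
 ORDER BY ROLE_NAME;
```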

Now the transport route needs to be configured. Native transport on HANA works by pulling: the target system pulls the content from the source system. That means the transport route has to be configured on tenant TRG.

Click on Transport -> http://hxehost-trg:8090/sap/hana/xs/lm/index.html?page=SystemsTab

Use the Register button to add the source system.

Press Next.

Press Maintain Destination.

I only maintain the logon data for tenant SRC on tab Authentication Details.

After pressing Save, I press the X on the upper right corner.

After I press Finish on the next screen, the tenant database should be registered. A connection test was also successful.

Now we can create the role in the IDE on the source system using

URL: http://hxehost-src:8090/sap/hana/ide/editor/

First, a package needs to be created. After that, I create Role R22_ADMIN. Packages and Roles are both created via right-click -> New -> … . After creating the Role, I added a simple Privilege to it.

To be able to transport the Package, it needs to be added to a Delivery Unit. To create a new Delivery Unit, HALM of tenant SRC is used:

http://hxehost-src:8090/sap/hana/xs/lm/?page=DUManagementTab

I created Delivery Unit ROBINSON_R22 and added the Package that I created before.
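Besides the HALM screen, the Delivery Unit and its package assignment can be inspected in the repository catalog of SRC. This is a hedged sketch: it assumes the `_SYS_REPO` tables `DELIVERY_UNITS` and `PACKAGE_CATALOG` with a `DELIVERY_UNIT` column, as I understand them; adjust the names if your revision differs.

```sql
-- Run in tenant SRC.
-- Assumption: these _SYS_REPO tables and columns match your HANA revision.
SELECT *
  FROM "_SYS_REPO"."DELIVERY_UNITS"
 WHERE DELIVERY_UNIT = 'ROBINSON_R22';

-- Packages assigned to the Delivery Unit
SELECT PACKAGE_ID, DELIVERY_UNIT
  FROM "_SYS_REPO"."PACKAGE_CATALOG"
 WHERE DELIVERY_UNIT = 'ROBINSON_R22';
```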

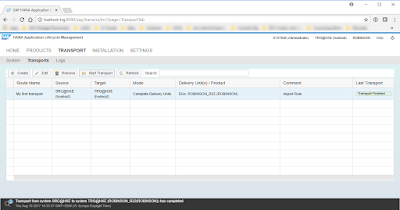

Now, the transport can be done. It has to be started from the target system:

http://hxehost-trg:8090/sap/hana/xs/lm/?page=TransportTab

Press the Create button.

After pressing Create, the transport is created and is ready to be started.

Select the transport and press Start Transport.

Success!

Now we can check the new Role in the target system.

The role is active in the target system and can be assigned to a user.
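The same check and assignment can be sketched in SQL on TRG. Transported repository Roles are named `<package>::<role>`, so `<package>` below stands for the package created in SRC (its name is not given in this blog), and `R22_USER` is a hypothetical user; both are placeholders to replace. Since R22_ADMIN is a repository Role, it is granted through `_SYS_REPO.GRANT_ACTIVATED_ROLE` rather than a plain GRANT.

```sql
-- Run in tenant TRG.
-- Confirm that the transported role exists in the catalog.
SELECT ROLE_NAME
  FROM SYS.ROLES
 WHERE ROLE_NAME LIKE '%::R22_ADMIN';

-- Hypothetical names: replace <package> with your package and R22_USER with an existing user.
CALL "_SYS_REPO"."GRANT_ACTIVATED_ROLE"('<package>::R22_ADMIN', 'R22_USER');
```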

That’s it. Thank you very much, please like and subscribe, and feel free to leave a comment.